“DeepPhase: Periodic Autoencoders for Learning Motion Phase Manifolds” © 2022 University of Edinburgh, University of Hong Kong

Character animation is a time-consuming task, and until now AI has offered no complete method for making it simpler. Research Scientist Sebastian Starke (Meta Reality Labs) and his team have created a new method that breaks down character animation and simplifies the process.

Here, Sebastian takes a deep dive into the method and explains how it will shape the future of character animation.

SIGGRAPH: Share some background about “DeepPhase: Periodic Autoencoders for Learning Motion Phase Manifolds.” What inspired this research?

Sebastian Starke (SS): There has been a lot of work on data-driven animation systems in the last few years, and one thing that became clear very early on was that using phases can be a powerful inductive bias that greatly helps synthesize high-quality character movements. The main benefit of the phase is that it aligns similar poses in motion capture data through unique timing variables that always progress forward, just as time does in nature. Surprisingly, this can be a difficult thing for AI systems to understand.

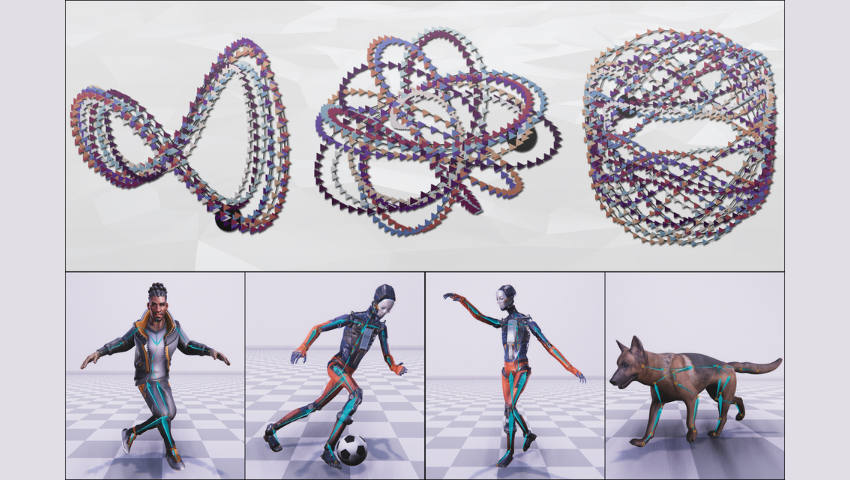

Until DeepPhase, most methods that used some type of phase alignment relied on handcrafted labeling of the phase, which restricted their utility to the specific problems being explored. Unfortunately, this caused phases to be considered a non-general concept for character animation, because different movements required different techniques for computing phases. It was clear that a system that could compute motion phases for arbitrary movements without manual supervision would be a great win for the animation community. Therefore, the main aim of this research was to figure out a way to learn phase variables directly from data. That allows us to apply this powerful inductive bias across a large range of complex movements and character morphologies using a single method, and with that to make phases a general concept for character animation.

SIGGRAPH: Describe the process of creating the Periodic Autoencoder. How does it learn periodic features from large, unstructured motion datasets?

SS: The core concept of the Periodic Autoencoder (PAE) model is to find a transformation that expresses character movements as a multi-dimensional function of time. The basic idea is to learn a neural network that autoencodes the given motion curves (i.e., joint velocities) and to force the reconstruction to pass through a bottleneck representation in the form of multiple sinusoidal functions. Since the amplitude, frequency, and offset for each curve are computed by applying a Fourier transform over the latent curves, the only variable the network effectively needs to learn is the timing (which is the phase shift) for each function. This induces learning the space-time alignment between motion and phase.
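To make the bottleneck described above more concrete, here is a minimal NumPy sketch of how amplitude, frequency, and offset could be read off a single latent curve with a Fourier transform. It is an illustration under stated assumptions, not the authors' implementation: the function names `sinusoid_params` and `reconstruct`, the power-weighted frequency estimate, and the convention of measuring the phase shift in cycles are all choices made for this example. In the real model the phase shift comes from the network; here it is simply passed in as a known value.

```python
import numpy as np


def sinusoid_params(latent, window_seconds):
    """Estimate amplitude, frequency (Hz) and offset of one latent curve.

    Assumption for this sketch: the curve is sampled uniformly over a
    window of `window_seconds`, and its parameters are summarised from
    the FFT power spectrum.
    """
    n = latent.shape[0]
    coeffs = np.fft.rfft(latent)
    freqs = np.fft.rfftfreq(n, d=window_seconds / n)

    # Power of every non-zero frequency bin (bin 0 is the constant offset).
    power = (2.0 / n) * np.abs(coeffs[1:]) ** 2
    total = power.sum()

    amplitude = float(np.sqrt((2.0 / n) * total))
    # Power-weighted mean frequency; a flat curve has no meaningful frequency.
    frequency = float((freqs[1:] * power).sum() / total) if total > 0 else 0.0
    offset = float(coeffs[0].real / n)
    return amplitude, frequency, offset


def reconstruct(t, amplitude, frequency, offset, phase):
    """Rebuild the curve as one sinusoid; `phase` is a shift in cycles."""
    return amplitude * np.sin(2.0 * np.pi * (frequency * t - phase)) + offset


# Toy latent curve: 2 Hz, amplitude 1.5, offset 0.3, phase shift 0.25 cycles.
window, n = 2.0, 120
t = np.arange(n) * (window / n)
curve = 1.5 * np.sin(2.0 * np.pi * (2.0 * t - 0.25)) + 0.3

A, F, B = sinusoid_params(curve, window)
# The phase shift stands in for the value the network would have to learn.
rebuilt = reconstruct(t, A, F, B, phase=0.25)
```

On this toy curve the three Fourier-derived parameters match the values used to generate it, so the phase shift is the only quantity left to supply before the sinusoid reproduces the input. That is the sense in which timing is the one variable left to learn.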

SIGGRAPH: What problems does the Periodic Autoencoder solve, and how do you envision it being used in the future?

SS: The main problem the Periodic Autoencoder solves is removing the need for constructing dataset-specific ways to extract phase variables. By learning from the data, it opens up the use of neural animation systems for previously unexplored tasks and allows us to model complex movements which don’t have immediately obvious local phase cycles (such as dancing). We think there is great potential for such systems to improve the quality and/or reduce the computational cost of animation systems (neural or motion matching), and we are also hopeful that the Periodic Autoencoder may find use in other domains which have temporal signals expressing some joint periodicity.

SIGGRAPH: Congratulations on receiving one of the SIGGRAPH 2022 Technical Papers Best Paper Awards! What does this recognition mean to you?

SS: Thank you so much! It’s obviously a great honor to receive a Technical Papers Best Paper Award at SIGGRAPH! We are glad that this work has received such recognition from the community and hope this award will encourage others to explore Periodic Autoencoding for their use cases. It is also nice to see how this initially exotic idea of using phases has evolved over the past years and has now established itself as a technique that is acknowledged by the community and appears to be an increasingly promising direction to pursue in the future.

SIGGRAPH: SIGGRAPH 2022 was excited to introduce roundtable sessions for Technical Papers. Talk about your interactions with SIGGRAPH participants. Do any key moments stand out?

SS: The roundtable sessions this year were an interesting new concept where more talks could be given in one session in a compact format. At the same time, the audience got the chance to ask more in-depth questions and have more interactive conversations with the presenters after their talks at their designated poster areas. In our case, a lot of people asked a range of very good questions, and we were able to discuss them properly, something we believe would have been more difficult in the previous format.

SIGGRAPH: What advice do you have for someone looking to submit to Technical Papers in the future?

SS: Don’t skimp on the demo! It is true that polishing results can be quite tedious and should never be considered a substitute for an original, creative, and amazing idea. However, computer graphics research – at its very core – lives for creating compelling visuals that demonstrate how a new technology pushes the boundaries of what is possible. Also, don’t be afraid to try something new and outside the box. If you are absolutely convinced of some idea and may receive some initial pushback, perhaps invest your time in improving or showcasing your idea better rather than looking for alternative and perhaps more streamlined paths. Oh, and try to make sure there are no bugs in your code.

Until 31 October, you can still experience SIGGRAPH 2022 on the virtual conference platform! Access some of the best content recorded in Vancouver, hours of on-demand content, the latest research from Technical Papers, Art Papers, and Posters, and more.

Sebastian Starke is a Research Scientist at Meta Reality Labs, where he applies neural networks to create realistic movements for virtual avatars. He received his PhD from the University of Edinburgh and worked as a Sr. AI Scientist at Electronic Arts, where he utilized his research to enhance character control techniques in video games. Sebastian is generally interested in applying deep learning to character animation tasks. He has published articles at SIGGRAPH, IROS, ICRA, CEC, and TEVC, has been a speaker at GDC, and has had his work featured by different media channels such as CNET, Inverse, NVIDIA GTC, and Two-Minute-Papers.

Ian Mason is a postdoctoral researcher in Brain & Cognitive Sciences at MIT. Currently, he researches the understanding of neural networks and how to use this understanding to improve few-shot, out-of-distribution, and continual learning behaviors. He did his PhD at the University of Edinburgh where he worked on machine learning for computer animation, stylistic motion generation, and domain adaptation.

Taku Komura joined the University of Hong Kong in 2020. Before joining HKU, he worked at the University of Edinburgh (2006-2020), City University of Hong Kong (2002-2006), and RIKEN (2000-2002). He received his BSc, MSc, and PhD in Information Science from the University of Tokyo. His research has focused on data-driven character animation, physically-based character animation, crowd simulation, 3D modeling, cloth animation, anatomy-based modeling, and robotics. Recently, his main research interests have been in physically-based animation and the application of machine learning techniques for animation synthesis. He received the Royal Society Industry Fellowship (2014) and the Google AR/VR Research Award (2017).