Imagine you are deciding between two different movies. Five of your friends say movie A is nothing special, but definitely above average. Of those same five friends, four tell you movie B is amazing, but one says it is a bit below average and wouldn’t recommend it.

Which movie would you choose to see? For me, the clear answer is movie B. I’d rather have an 80% chance of seeing a great movie than a 100% chance of seeing one that is just pretty good. Yet, if you rely on the popular movie review website Rotten Tomatoes, you would be steered toward movie A. This is unfortunate, and the result of bad statistical methodology.

Millions of people rely on Rotten Tomatoes, but not many of them know how it actually works. Rotten Tomatoes scores are calculated in the simplest of ways: they are the share of a movie’s reviews from well-established film critics that are positive. For example, at the time of writing, Aquaman had received 312 reviews from Rotten Tomatoes-approved critics. Of these, 202 were deemed positive, so the trident-toting superhero flick gets a Rotten Tomatoes score of 65% (202 divided by 312, rounded). This score does not distinguish between an extremely good review and a barely positive one.
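The Rotten Tomatoes calculation is a plain ratio. Here is a minimal Python sketch of it; the function name `tomatometer` is hypothetical, and rounding to the nearest whole percent is an assumption about how the score is displayed, not the site’s documented rule.

```python
def tomatometer(num_positive: int, num_reviews: int) -> int:
    """Share of reviews judged positive, as a whole-number percentage.

    Hypothetical helper: it only counts positive reviews and ignores
    how strongly positive or negative each one is.
    """
    if num_reviews <= 0:
        raise ValueError("need at least one review")
    # Assumed rounding to the nearest whole percent.
    return round(100 * num_positive / num_reviews)


# Aquaman, at the time of writing: 202 positive reviews out of 312.
print(tomatometer(202, 312))  # 65
```

A rave and a lukewarm thumbs-up each add exactly 1 to `num_positive`, which is the information loss the rest of this piece is about.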

That is crazy. As a data analyst, if I were trying to assess the quality of a product, I would never take a set of nuanced reviews and turn them into what statisticians call “binaries” (yes or no, 1 or 0, positive or negative). Doing it this way throws away a lot of pertinent information. Instead, I would rate how positive each review is on a finer-grained scale, like 0-100, A-F, or even 1-5 clamshells, and then take the average or median of those scores.
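To see how much the yes-or-no approach discards, here is a small sketch that scores the opening movie example both ways. The 0-100 numbers standing in for your friends’ opinions and the cutoff of 60 for counting a review as positive are hypothetical, chosen only for illustration.

```python
from statistics import mean, median

# Hypothetical 0-100 scores for the five friends in the opening example.
movie_a = [65, 65, 65, 65, 65]  # "nothing special, but definitely above average"
movie_b = [95, 95, 95, 95, 45]  # four "amazing", one "a bit below average"

POSITIVE_CUTOFF = 60  # assumed threshold for calling a review "positive"


def share_positive(scores, cutoff=POSITIVE_CUTOFF):
    """Binary approach: percentage of scores at or above the cutoff."""
    return 100 * sum(s >= cutoff for s in scores) / len(scores)


for name, scores in [("A", movie_a), ("B", movie_b)]:
    print(f"Movie {name}: share positive = {share_positive(scores)}%, "
          f"mean = {mean(scores)}, median = {median(scores)}")
```

With these made-up numbers, the binary share favors movie A (100% versus 80%), while both the mean (65 versus 85) and the median (65 versus 95) favor movie B.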

Luckily, there is a review website that does just that: Metacritic. Whatever the other merits of these sites, Metacritic’s method of scoring movies is simply better than Rotten Tomatoes’.

Metacritic takes reviews from critics, gives them a 0-100 score, and then averages those scores. Metacritic has a higher threshold for the renown of the critics whose reviews are considered for the site, so while the Rotten Tomatoes score for Aquaman includes the reviews of 312 critics, Metacritic only uses 49. (The movie got a score of 55.) Also, as Alissa Wilkinson explains in Vox, Metacritic gives more weight in its average to the most highly respected critics, like those from the New York Times. Reviewers who disagree with the rating that Metacritic assigns to their review can have it changed (the same is true for Rotten Tomatoes).
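Giving some critics more say means taking a weighted average rather than a simple one. The sketch below shows the general idea only; because Metacritic does not publish its weights, both the `weighted_average` function and the weights in the example are hypothetical, not the site’s actual method.

```python
def weighted_average(scores, weights):
    """Weighted mean of 0-100 review scores.

    Hypothetical illustration: the real per-critic weights are not public.
    """
    if not scores or len(scores) != len(weights):
        raise ValueError("scores and weights must be non-empty and equal length")
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    # Each score counts in proportion to its critic's weight.
    return sum(s * w for s, w in zip(scores, weights)) / total_weight


# Hypothetical reviews: one heavily weighted critic and two others.
print(weighted_average([90, 60, 60], [2.0, 1.0, 1.0]))  # 75.0
```

Here the heavily weighted critic pulls the result up to 75, compared with a simple average of 70.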

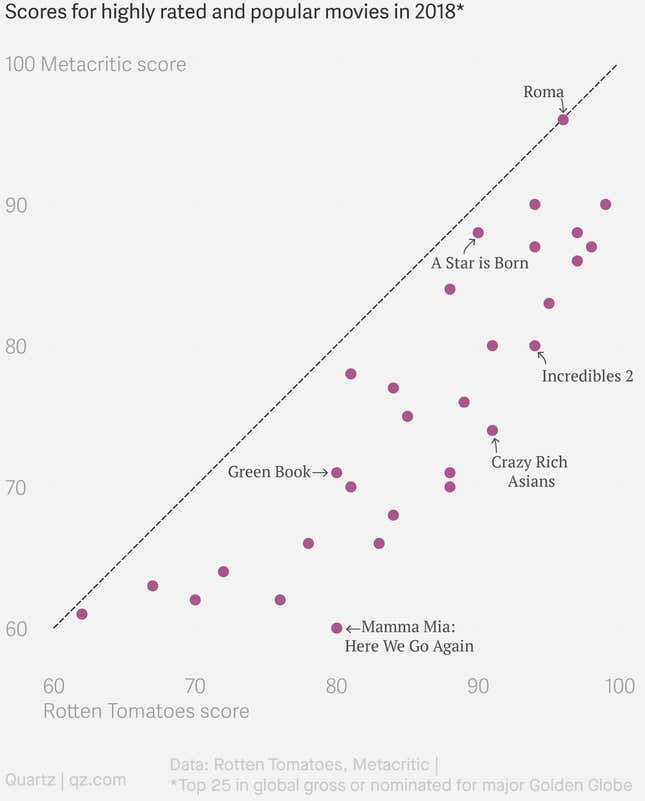

The chart above shows the Metacritic and Rotten Tomatoes scores for movies that were either in the top 25 in global revenues in 2018 or were nominated for a Golden Globe in a major category. The movies also had to receive a score above 60 on both sites.

A comparison of the Metacritic and Rotten Tomatoes scores for the hits Crazy Rich Asians and A Star Is Born reveals the differences between the two sites. Crazy Rich Asians, a fun romantic comedy, scored a 91 on Rotten Tomatoes, while A Star Is Born, a leading contender to win Best Picture at the Academy Awards, received a 90. A virtual tie. On Metacritic, by contrast, Crazy Rich Asians got a 74, compared with an 88 for A Star Is Born. Both are pleasant movies, so I can see how, judged only on yes-or-no answers, they could be rated similarly. But to me and to most critics, A Star Is Born is a much better movie. If I could see only one of them, it would be wise to go with Gaga.

My only complaint about Metacritic is that it has kept its exact methodology proprietary, so others can’t replicate the calculations. It would be better if this were public, so users could assess whether they tend to agree with the reviewers given the most weight in the site’s calculations. If a reader is really curious, they can search for a particular movie and see which reviewer gave what score, but that’s a laborious process.

Statistics are a powerful tool for assessing culture, but only when used properly. The next time you are deciding which movie to see, if you want to see something great and not just good, consider what goes into each review site’s calculations.