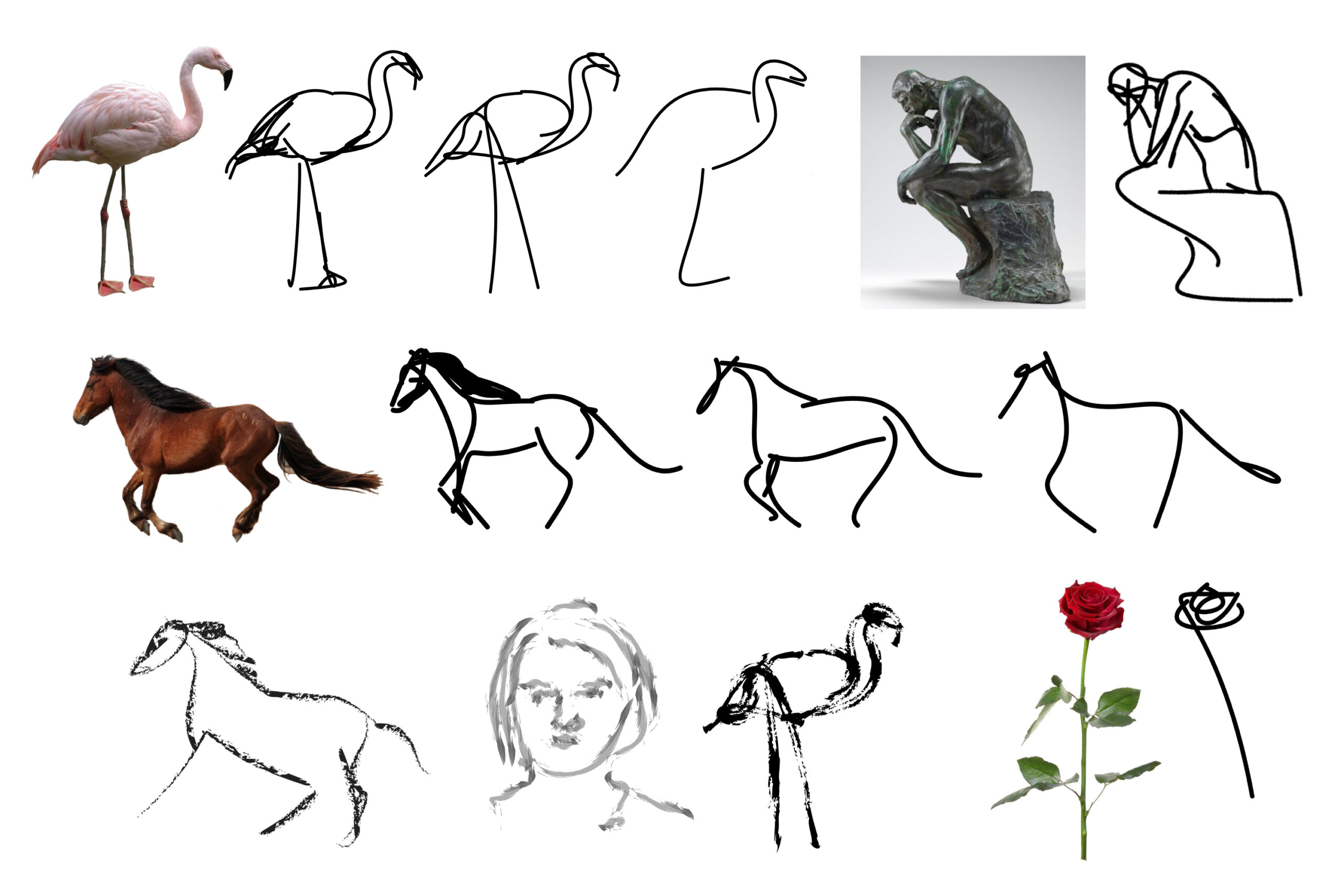

“The Thinker (Le Penseur)” © Gift of Mrs. John W. Simpson, public domain, National Gallery of Art; horse © “Wild Horses” by firelizard5, image from search.openverse.engineering, marked with a CC BY 2.0 license; rose © AndreaA, Can Stock Photo Inc.; flamingo from freepikpsd.com [Free for commercial use] via https://bit.ly/3F6tipS.

Abstraction is at the heart of sketching because line drawings are simple and minimal. Abstraction entails identifying the essential visual properties of an object or scene, which requires semantic understanding and prior knowledge of high-level concepts. Abstract depictions are therefore challenging for artists, and even more so for machines. SIGGRAPH caught up with the creators of CLIPasso, an object-sketching method that can achieve different levels of abstraction, guided by geometric and semantic simplifications. The method uses the remarkable ability of CLIP (Contrastive Language-Image Pre-training) to distill semantic concepts from sketches and images alike.

SIGGRAPH: Share some background about “CLIPasso.” What inspired the research?

Yael Vinker (YV): In this work, we examine a core question in the field of computational arts: are computers capable of understanding and creating abstractions? Abstraction entails identifying an object’s or scene’s essential visual properties, which requires semantic understanding and prior knowledge of high-level concepts. CLIPasso is a method that converts an image of an object into a sketch, allowing for varying levels of abstraction while preserving the key visual features of the given object.

This research was inspired by my experience as an amateur artist. In my spare time, I enjoy sketching, and I once attempted to draw a specific building from my porch but found it very difficult. When I used my pen to transfer what my eyes observed, there was a large gap between my observation and the resulting sketch. Having come across this difficulty, I started reading about the biases that prevent people from drawing precisely. As a result, I decided to develop a sketching algorithm that would share the social biases we have as humans and express them through sketching in a similar way. Over time, this project evolved and changed a lot, but the original idea remains.

SIGGRAPH: Let’s get technical. How did you ensure this method can achieve different levels of abstraction?

YV: Our method relies on a recently developed language-image model called CLIP, which can extract meaningful features from images across different modalities. By using CLIP, we can extract features from both natural images and sketches without training a dedicated model on sketch data.
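To make "comparing a photograph and a sketch in one feature space" concrete, here is a minimal sketch of a semantic distance between two embedding vectors. It assumes the vectors were already produced by an image encoder such as CLIP's; the short vectors in the usage block are made-up placeholders, not real CLIP features, and `semantic_distance` is a hypothetical helper name. One minus cosine similarity is a common way to compare such embeddings.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors of equal length."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("feature vectors must be non-zero")
    return dot / (norm_a * norm_b)


def semantic_distance(image_features: Sequence[float], sketch_features: Sequence[float]) -> float:
    """Hypothetical helper: 0 when embeddings point the same way, up to 2 when opposite."""
    return 1.0 - cosine_similarity(image_features, sketch_features)


if __name__ == "__main__":
    # Placeholder vectors standing in for encoder outputs (assumption, not real CLIP features).
    photo = [0.9, 0.1, 0.3]
    sketch = [0.8, 0.2, 0.25]
    unrelated = [-0.1, 0.9, -0.4]
    print(semantic_distance(photo, sketch))     # small: similar content
    print(semantic_distance(photo, unrelated))  # larger: different content
```

Because only the direction of the vectors matters, a sketch and a photograph can be judged similar even though their pixels look very different, which is the property the answer above relies on.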

We define a sketch as a set of strokes, and we optimize the parameters of the strokes using CLIP. By changing the number of strokes available to our optimization scheme, we can control the level of abstraction. If we decrease the number of strokes, this forces the model to express a stronger abstraction, since it only has a limited number of strokes to convey the same concept. The model chooses how to best utilize its limited budget of strokes, resulting in the emergence of abstraction.
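The mechanics of "optimizing a fixed budget of stroke parameters" can be illustrated with a toy, self-contained sketch. It assumes each stroke is a cubic Bézier curve with four 2D control points, so a sketch with n strokes has 8n parameters. It also swaps in two simplifications that are not CLIPasso's actual method: a simple geometric loss (how far sampled stroke points lie from a target circle) stands in for the CLIP-based loss, and finite-difference gradient descent stands in for a differentiable rasterizer with backpropagation. All names are hypothetical. The toy shows only how a fixed set of parameters is updated to lower a loss; the emergence of abstraction described above depends on the CLIP-based loss, which is not reproduced here.

```python
import math
import random

# Assumed representation: a stroke is a cubic Bezier curve with four 2D control points.
Stroke = list[list[float]]


def bezier_point(stroke: Stroke, t: float) -> tuple[float, float]:
    """Evaluate a cubic Bezier curve at parameter t in [0, 1] (Bernstein form)."""
    u = 1.0 - t
    weights = (u * u * u, 3 * u * u * t, 3 * u * t * t, t * t * t)
    x = sum(w * p[0] for w, p in zip(weights, stroke))
    y = sum(w * p[1] for w, p in zip(weights, stroke))
    return x, y


def init_sketch(num_strokes: int, seed: int = 0) -> list[Stroke]:
    """Randomly place a fixed budget of strokes in the unit square."""
    rng = random.Random(seed)
    return [[[rng.random(), rng.random()] for _ in range(4)] for _ in range(num_strokes)]


def num_parameters(sketch: list[Stroke]) -> int:
    """Number of optimizable values: 4 control points x 2 coordinates per stroke."""
    return sum(2 * len(stroke) for stroke in sketch)


def toy_loss(sketch: list[Stroke], samples: int = 16) -> float:
    """Stand-in for a CLIP-based loss: mean squared distance of sampled
    stroke points from a circle of radius 0.3 centered at (0.5, 0.5)."""
    cx, cy, r = 0.5, 0.5, 0.3
    total = 0.0
    for stroke in sketch:
        for i in range(samples):
            x, y = bezier_point(stroke, i / (samples - 1))
            total += (math.hypot(x - cx, y - cy) - r) ** 2
    return total / (len(sketch) * samples)


def optimize(sketch: list[Stroke], steps: int = 50, lr: float = 1.0, eps: float = 1e-5) -> list[Stroke]:
    """Gradient descent on every control-point coordinate, with gradients
    estimated by central finite differences (a stand-in for backpropagation)."""
    for _ in range(steps):
        updates = []
        for stroke in sketch:
            for point in stroke:
                for c in range(2):
                    original = point[c]
                    point[c] = original + eps
                    loss_plus = toy_loss(sketch)
                    point[c] = original - eps
                    loss_minus = toy_loss(sketch)
                    point[c] = original
                    updates.append((point, c, (loss_plus - loss_minus) / (2 * eps)))
        # Apply all updates together so every gradient uses the same parameters.
        for point, c, grad in updates:
            point[c] -= lr * grad
    return sketch


if __name__ == "__main__":
    # Fewer strokes means fewer parameters for the optimizer to work with.
    for budget in (2, 4):
        sketch = init_sketch(budget, seed=1)
        before = toy_loss(sketch)
        optimize(sketch, steps=30)
        print(f"{budget} strokes ({num_parameters(sketch)} parameters): "
              f"loss {before:.4f} -> {toy_loss(sketch):.4f}")
```

The stroke budget is fixed when the sketch is initialized and never changes during optimization; the only thing the optimizer decides is where to put the strokes it has.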

SIGGRAPH: How is “CLIPasso” different from traditional sketching methods?

YV: In freehand sketch generation, the goal is to produce sketches that are abstract in terms of structure and semantic interpretation, mimicking the style of a human. Existing approaches to this problem typically use large datasets of sketches drawn by humans. Deep neural networks then learn the statistics of these datasets and generate new samples based on them. Various datasets are available: some are very abstract, such as sketches drawn from a text prompt, while others are more concrete, depicting specific instances. Approaches relying on such datasets are limited to the style and level of abstraction of the sketches they observe during training. In most cases, these methods are also limited to the categories introduced during training.

In our approach, we use the CLIP model, and the style is defined by our parametric representation, which can easily be modified in a post-processing stage. As a result, our method is not limited to a particular style and can produce multiple levels of abstraction without retraining for different styles or abstraction levels. In addition, we can handle images from uncommon categories, such as drawings and sculptures, which are not normally included in common sketch datasets.
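Because the output is a set of parametric strokes rather than pixels, changing its look can be a separate, cheap step. The sketch below assumes strokes are stored as four cubic Bézier control points in a unit square (an assumption, not necessarily CLIPasso's storage format) and writes them as SVG `path` elements. `to_svg` and its `stroke_width` and `color` parameters are hypothetical; re-exporting the same strokes with different values changes the style without any further optimization.

```python
# Assumed representation: four control points of a cubic Bezier curve in [0, 1] x [0, 1].
Stroke = list[tuple[float, float]]


def stroke_to_path(stroke: Stroke, size: float) -> str:
    """Convert one cubic Bezier stroke to SVG path data, scaled to the canvas."""
    (x0, y0), (x1, y1), (x2, y2), (x3, y3) = [(x * size, y * size) for x, y in stroke]
    return (f"M {x0:.1f} {y0:.1f} "
            f"C {x1:.1f} {y1:.1f}, {x2:.1f} {y2:.1f}, {x3:.1f} {y3:.1f}")


def to_svg(strokes: list[Stroke], size: int = 224, stroke_width: float = 1.5, color: str = "black") -> str:
    """Render strokes as an SVG document; style is applied only at export time."""
    paths = "\n".join(
        f'  <path d="{stroke_to_path(s, size)}" fill="none" stroke="{color}" '
        f'stroke-width="{stroke_width}" stroke-linecap="round"/>'
        for s in strokes
    )
    return (f'<svg xmlns="http://www.w3.org/2000/svg" width="{size}" height="{size}" '
            f'viewBox="0 0 {size} {size}">\n{paths}\n</svg>')


if __name__ == "__main__":
    strokes = [[(0.1, 0.8), (0.3, 0.2), (0.7, 0.2), (0.9, 0.8)]]
    thin = to_svg(strokes, stroke_width=1.0)
    bold = to_svg(strokes, stroke_width=6.0, color="#1f3a93")
    print(thin)
    print(bold)
```

Keeping geometry and style separate in this way means the same optimized strokes can be exported as a thin pencil-like drawing or a bold marker-like one.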

SIGGRAPH: Congratulations on receiving one of the SIGGRAPH 2022 Technical Papers Best Paper Awards! What does this recognition mean to you?

YV: My excitement at receiving such an award is beyond words. This is a huge honor for me and for the team. We are delighted that the community appreciates our work and that all the hard work and long hours have paid off. As a researcher, I am also excited to see people using our tool, and I look forward to seeing them build on it in the future.

SIGGRAPH: What is the next step for your “CLIPasso” research?

YV: The recent developments in generative models fascinate me. I am very happy to live in an era where computational models are so powerful, enabling the creation of images that are realistic, creative, and of high quality. In particular, I am interested in generative processes that involve abstraction, and I want to develop tools and algorithms that can help and inspire artists and designers in their work.

SIGGRAPH: What advice do you have for researchers who are considering submitting to SIGGRAPH Technical Papers in the future?

YV: I would advise them to be passionate about their research and investigate what they love and find exciting.

Ready to showcase your leading and innovative research? Submit to the SIGGRAPH 2023 Technical Papers program by 24 January.

Yael Vinker is a PhD student at Tel Aviv University, advised by Prof. Daniel Cohen-Or and Prof. Ariel Shamir. She is currently a student researcher at Google, in the Creative Camera team. She received her B.Sc. in computer science from the Hebrew University of Jerusalem. During her B.Sc., she also studied visual communication at Bezalel Academy of Arts and Design. She was an intern at EPFL (VILAB), supervised by Prof. Amir Zamir. Prior to that, she was a research intern at Disney Research Zurich. Her research interests are computer graphics, image processing, and machine learning. In her research, she aims to combine her passion for art and design.

Ehsan Pajouheshgar is a PhD student at the Image and Visual Representation Laboratory (IVRL) at EPFL, where he is advised by Prof. Sabine Süsstrunk. He received his B.Sc. degree in software engineering from Sharif University of Technology in Iran. His research focuses on creating compact and differentiable image and video parameterizations.

Jessica Y. Bo is a master's student in mechanical engineering at ETH Zurich and previously a research intern in the VILAB group at EPFL. Her research interest is in the human-centered design of intelligent systems and devices, which combines artificial intelligence, human cognition, and user-centered design principles to create technologies for human benefit. She currently works on deep learning and human-computer interaction for healthcare applications as a visiting student at MIT. She also holds an undergraduate degree in mechanical and biomedical engineering from the University of British Columbia, where her work delved into medical device design.

Roman Bachmann is an EPFL PhD student at VILAB, where he is advised by Prof. Amir Zamir. He received his M.Sc. degree in data science at EPFL, where he also previously completed his B.Sc. in computer science. His research focuses on learning good multi-modal priors for efficient visual transfer learning.

Amit Bermano is an assistant professor at the Blavatnik School of Computer Science at Tel Aviv University. His research focuses on computer graphics, computer vision, and computational fabrication (3D printing). Previously, he was a postdoctoral researcher at the Princeton Graphics Group, hosted by Prof. Szymon Rusinkiewicz and Prof. Thomas Funkhouser. Before that, he was a postdoctoral researcher at Disney Research Zurich. He conducted his PhD studies at ETH Zurich under the supervision of Prof. Dr. Markus Gross, in collaboration with the computational materials group of Disney Research Zurich.

Daniel Cohen-Or is a professor in the Department of Computer Science at Tel Aviv University and holds The Isaias Nizri Chair in Visual Computing. He received his PhD from the Department of Computer Science at the State University of New York at Stony Brook in 1991. He has served on the editorial boards of several international journals, including CGF, IEEE TVCG, The Visual Computer, and ACM TOG, and regularly served as a member of the program committees of international conferences. He is the inventor of RichFX and Enbaya technologies. He received the Eurographics Outstanding Technical Contributions Award in 2005 and the ACM SIGGRAPH Computer Graphics Achievement Award in 2018. In 2012, he received The People’s Republic of China Friendship Award. In 2015, he was named a Thomson Reuters Highly Cited Researcher. In 2016, he became an adjunct professor at Simon Fraser University. In 2019, he won The Kadar Family Award for Outstanding Research. In 2020, he received The Eurographics Distinguished Career Award.

Amir Zamir is an assistant professor of computer science at the Swiss Federal Institute of Technology (EPFL). His research interests are in computer vision, machine learning, and perception-for-robotics. Prior to joining EPFL in 2020, he was with UC Berkeley, Stanford, and UCF. He has been recognized with the CVPR 2018 Best Paper Award, CVPR 2016 Best Student Paper Award, CVPR 2020 Best Paper Award Nomination, SIGGRAPH 2022 Best Paper Award, NVIDIA Pioneering Research Award 2018, PAMI Everingham Prize 2022, and ECCV/ECVA Young Researcher Award 2022. His research has been covered by popular press outlets, such as The New York Times or Forbes.

Prof. Ariel Shamir is the former Dean of the Efi Arazi School of Computer Science at Reichman University (the Interdisciplinary Center) in Israel. He received his PhD in computer science in 2000 from the Hebrew University of Jerusalem and spent two years as a postdoc at the University of Texas at Austin. Prof. Shamir has numerous publications and a number of patents, and was named one of the most highly cited researchers on the Thomson Reuters list in 2015. He has broad commercial experience consulting for various companies, including Disney Research, Mitsubishi Electric, PrimeSense (now Apple), Verisk, Donde (now Shopify), and more. Prof. Shamir specializes in computer graphics, image processing, and machine learning. He is a member of the ACM SIGGRAPH, IEEE Computer, AsiaGraphics, and EuroGraphics associations.