Image Credit: © Bernhard Riecke, 2022

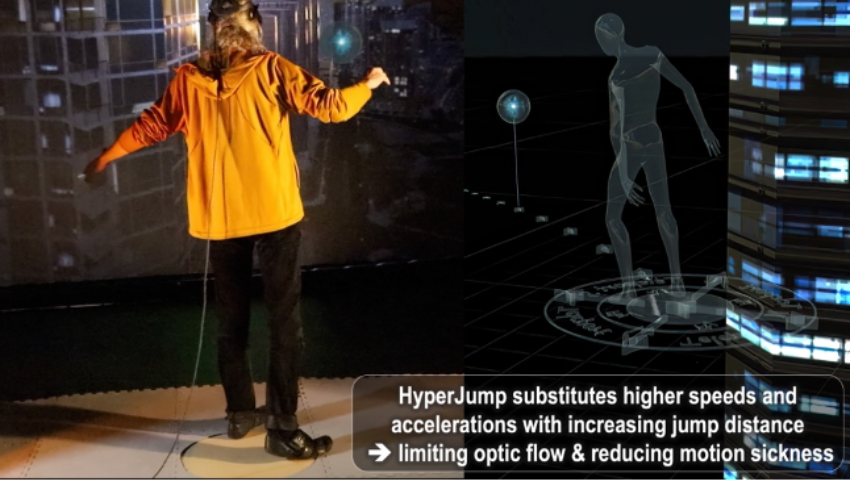

SIGGRAPH sat down with the team behind one of this year’s Immersive Pavilion projects, “HyperJumping in Virtual Vancouver: Combating Motion Sickness by Merging Teleporting and Continuous VR Locomotion in an Embodied Hands-free VR Flying Paradigm,” to talk about this immersive experience and what participants can expect at SIGGRAPH 2022. Read on to learn more.

SIGGRAPH: Tell us about the process of developing “HyperJumping in Virtual Vancouver.” What inspired your team to pursue the project?

Bernhard Riecke (BR): I have researched VR since 1995, and cybersickness was one of my earliest experiences and annoyances with it. Initially, a lot of cybersickness was associated with technical issues such as head-mounted display (HMD) tracking inaccuracies and latencies. But even after those technical challenges were increasingly taken care of by improvements in technology, cybersickness remained one of the key challenges preventing the widespread adoption of VR and continued to undermine user experiences.

Frustrated with the lack of any suitable cybersickness mitigation methods, we started brainstorming and researching a variety of potential solutions to cybersickness (after reviewing a lot of the literature and our own experiences). In 2019, we came up with the basic idea of HyperJumping (i.e., to combine continuous locomotion at sub-cybersickness thresholds with automatically triggered intermittent jumps, or short teleports).

Our main motivation was to try and create a seamless interface that doesn’t require the user to think about the interface or what to do to prevent getting sick but instead automatically prevents cybersickness.

This was, of course, not an easy problem to solve, and indeed VR researchers and developers have worked for decades on this — so our approach clearly builds on this great foundation of research from our colleagues.

In particular, we wanted to combine the advantages of continuous locomotion, which is essential for supporting low-cognitive-load spatial orientation and a natural, embodied sensation of self-motion (“vection”) as well as predictability and controllability, with the advantages of teleporting, which avoids higher optic flow speeds and accelerations and thus reduces the intersensory cue conflict and cybersickness.

After many iterations initially on paper and later using various VR prototypes and pilot tests, and supported by copious amounts of coffee and occasional drinks, we gradually improved on our vision and were increasingly excited that we were eventually able to fly through large virtual environments — in particular, a highly detailed virtual model of Vancouver where most of us live — at breathtaking speeds for hours without getting cybersick.
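The core idea described in this answer (continuous motion kept below a comfort threshold, plus automatically triggered short teleports) can be illustrated with a minimal one-dimensional sketch. This is not the team's implementation: the names `HyperJumpState`, `step`, and `simulate`, the 10 m/s speed cap, and the 0.5 s jump interval are all hypothetical values chosen for illustration, and the requested speed is assumed to be non-negative.

```python
from dataclasses import dataclass


@dataclass
class HyperJumpState:
    position: float = 0.0         # distance travelled along the path (m)
    jump_debt: float = 0.0        # distance not yet covered by continuous motion (m)
    time_since_jump: float = 0.0  # seconds since the last jump opportunity


def step(state, requested_speed, dt,
         max_continuous_speed=10.0, jump_interval=0.5):
    """Advance the state by dt seconds; return True if a jump happened.

    max_continuous_speed and jump_interval are assumed, illustrative values.
    """
    # Continuous motion is capped at the (assumed) comfort threshold.
    continuous_speed = min(requested_speed, max_continuous_speed)
    state.position += continuous_speed * dt

    # Any speed above the cap accumulates as distance owed to the user.
    state.jump_debt += (requested_speed - continuous_speed) * dt
    state.time_since_jump += dt

    jumped = False
    if state.time_since_jump >= jump_interval:
        if state.jump_debt > 0:
            # Short teleport: cover the owed distance instantaneously.
            state.position += state.jump_debt
            state.jump_debt = 0.0
            jumped = True
        state.time_since_jump = 0.0
    return jumped


def simulate(requested_speed, duration, dt=0.25):
    """Run the sketch for `duration` seconds; return (position, jump count)."""
    state = HyperJumpState()
    jumps = 0
    for _ in range(int(duration / dt)):
        if step(state, requested_speed, dt):
            jumps += 1
    return state.position, jumps
```

In this sketch, a user who requests a speed below the cap moves purely continuously, while a user who requests a higher speed still covers the same average distance, with the excess delivered in periodic jumps. A fixed jump interval is used here only for simplicity.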

SIGGRAPH: What was your favorite aspect of creating this project?

BR: During the early parts of the project, I especially enjoyed the creative ideation and brainstorming with Markus and our mental simulations of what the user experience might be like for the various ideas we explored and tried to imagine. We purposefully tried not to sit in front of a computer or have VR equipment around so as not to restrict our ideation.

In the months leading up to the SIGGRAPH submission, I particularly enjoyed the regular meetings with David (which often went way into the night) where we rapidly hypothesized what we should do (and what should or should not work) and implemented simple prototypes in Unity to test our design hypotheses, often testing a whole bunch of them in one long session.

SIGGRAPH: What specific challenges came about during the making of this VR experience?

BR: One of the key challenges that came about during the making of HyperJump was finding the right balance between empowering users and giving them as much agency as possible (e.g., letting them fly as fast as they wanted), while at the same time ensuring that the system prevents them from getting sick. Another challenge was how to enable users to fly (or drive) at faster speeds while still being able to control and maneuver intuitively and without running into obstacles or flying through buildings. After many iterations we settled on a visualization of both a continuous path prediction and an indication of the next jump location and point in time, supported by audio cues — jumping to the beat of the music.

SIGGRAPH: Do you anticipate more motion-friendly VR to be used in the future?

BR: We hope that our HyperJump approach, together with the great research and ideas of our colleagues, will inspire VR designers to build on these approaches and create VR experiences that allow users to benefit from the potential of VR without having to learn complex or unnatural locomotion methods and without having to worry about getting cybersick.

SIGGRAPH: What do you hope participants take away from their experience as they interact with your project this August?

BR: We hope that participants will enjoy the unique opportunity to not just physically be in the Vancouver Convention Centre (SIGGRAPH’s location) but also do things they could never do in real life — fly through an impressively detailed replica (“digital twin”) of Vancouver, alone or together with other participants, as slowly or quickly as they’d like, without getting cybersick.

Additionally, we hope to reach people who have already had negative traveling experiences in VR and provide them with a better, cybersickness-free, but still engaging experience this time.

Participants will be in full control of their locomotion and, when using the leaning-based interface, their hands are freed up so they can be used naturally for other interactions or communication, just as when walking (where legs are used for locomotion, but hands are free for other tasks).

SIGGRAPH: What do you find most exciting about the final product you will be presenting to the SIGGRAPH 2022 community?

BR: We are excited to present a system that comes at no additional cost and can be easily integrated into all kinds of VR and immersive gaming applications, yet, from all our testing with a large range of diverse audiences, seems to be able to effectively prevent cybersickness (we’re currently running a more formal user study to corroborate those observations) while at the same time allowing audiences to have an embodied and intuitive experience of flying. And with our leaning-based version, all of that works without having to hold or worry about a controller, press any buttons, or deflect a joystick.

Personally, I’m thrilled to have co-created a VR experience that conveys many of the aspects I appreciate about (lucid or regular) dream flying: I’m in full control, unmediated, and empowered to fly in any direction and speed I want, just by thinking about it (and moving a bit in the direction I’d like to go).

SIGGRAPH: As a Vancouver-based experience, what advice — or travel tips — do you have for someone looking to attend SIGGRAPH 2022 in person?

BR: Well, in our experience you can quickly explore different parts of Vancouver in VR. Some of these places you might already have seen in real Vancouver, and others might give you inspiration for where to go in Vancouver.

For sightseeing tips, just walking westward from the convention center along the waterfront is quite enjoyable and can be extended to a longer trip around Stanley Park.

Experience “HyperJumping in Virtual Vancouver” and other outstanding immersive experiences at SIGGRAPH 2022! Register now to attend in person in Vancouver, or join us virtually or in a hybrid capacity.

Bernhard Riecke is a full professor at the School of Interactive Arts and Technology at Simon Fraser University (SFU) where he leads the iSpace Lab (an acronym for immersive Spatial Perception Action/Art Cognition and Embodiment). He joined SFU in 2008, after researching for a decade in the Virtual Reality Group of the Max Planck Institute for Biological Cybernetics in Tübingen, Germany, and working as a post-doctoral researcher at the Max Planck Institute, Vanderbilt University, and UC Santa Barbara. His research approach combines fundamental scientific research with an applied perspective of improving human-computer interaction. He is particularly excited to push the envelope and research in areas including human spatial cognition/orientation/updating/navigation; enabling robust and effortless spatial orientation in VR and telepresence; self-motion perception, illusions (“vection”), interfaces, and simulation; and designing for transformative positive experiences using VR (which he touches on in his TEDx Talk “Could Virtual Reality Make Us More Human?”).

David Clement is currently an independent researcher working at the intersection of cognitive science, machine learning, simulation, and computer graphics. He has over 40 years of experience in applied technology applications including real-time content generation, large-scale simulation, information sharing and control applications, and many computer graphics-based solutions.

His recent collaborations include SFU, Human Studio, and GeoSim.

He has also co-founded many companies including Aizen Experiences, Wavesine Solutions, and Senbionic.

Daniel Zielasko is currently a postdoctoral researcher and lecturer at the University of Trier’s HCI group. He received his doctoral degree in 2020 at the Virtual Reality and Immersive Visualization group at RWTH Aachen University for studying desk-centered virtual reality. He has worked together with neuroscientists, psychologists, medical technicians, and archaeologists on projects such as the EU flagship project HBP (Human Brain Project) and the SMHB (Supercomputing and Modeling for the Human Brain). He received his master’s degree in computer science in 2013 at RWTH Aachen University for studying correction mechanisms for optically tracked anatomical joints. Today, he is working on the integration of VR technologies and methods into everyday life, such as existing professional workflows and entertainment. He has a special interest in the mitigation of cybersickness and the design of convincing and innovative 3D user interfaces.

Denise Quesnel is pursuing her PhD at Simon Fraser University’s School of Interactive Arts and Technology, with the iSpace Lab. She researches how virtual and extended realities (VR/XR) can alleviate the social and emotional distress associated with chronic physical conditions and illness and utilize a strengths-based approach for positive functioning. She is interested in contributing to interdisciplinary translational research with the fields of experience design, computer science, psychology, and clinical health.

Ashu Adhikari has a master’s degree from the School of Interactive Arts and Technology (SIAT), Simon Fraser University (SFU). As an MSc student, he joined the iSpace team and has collaborated with the team ever since. He pursues interests in embodied interfaces in virtual reality.