The first three "nodes" of the conspiracy-theory network known as QAnon arose in late 2017 in the persons of founders Tracy Diaz, Paul Furber and Coleman Rogers. They had figured out how to profit from promoting the posts of "Q," a mysterious figure claiming to have inside information about an imminent mass arrest, undertaken with the blessing of Donald Trump, that would nab Hillary Clinton and others for running a pedophile ring. They interpreted, analyzed and amplified Q's cryptic ramblings about a massive Satanic child-sex-trafficking, blood-drinking cult run by prominent Democrats, among other dubious stories, on YouTube, Reddit, Facebook, Instagram, 8chan and other social-media outlets. Over several years, they racked up hundreds of millions of "follows," "likes," and "shares"—each connection extending their reach outward, like spokes in a wheel, to new followers, each of whom became another node in the network.

QAnon is now a firmly entrenched and quickly growing force of disruption in the American information landscape. President Trump, perhaps QAnon's most influential promoter, had as of August retweeted or mentioned 129 different Twitter accounts associated with QAnon, according to the research group Media Matters for America, a left-leaning non-profit that aims to be a watchdog for conservative media. QAnon distributes conspiracy theories and other forms of disinformation and foments violence. In the 2016 "Pizzagate" episode, a precursor to QAnon, a man burst into Comet Ping Pong, a pizzeria in Washington, D.C., and fired an AR-15 assault rifle, intending to rescue child sex-slaves (only to find people eating pizza). QAnon believers have committed at least two murders and a child kidnapping, set one California wildfire ablaze, blocked a bridge by the Hoover Dam, occupied a cement plant in Tucson, Arizona, and plotted to assassinate Joe Biden. One man now facing charges for plotting the kidnapping of Michigan governor Gretchen Whitmer posted QAnon conspiracy theories on his Facebook page.

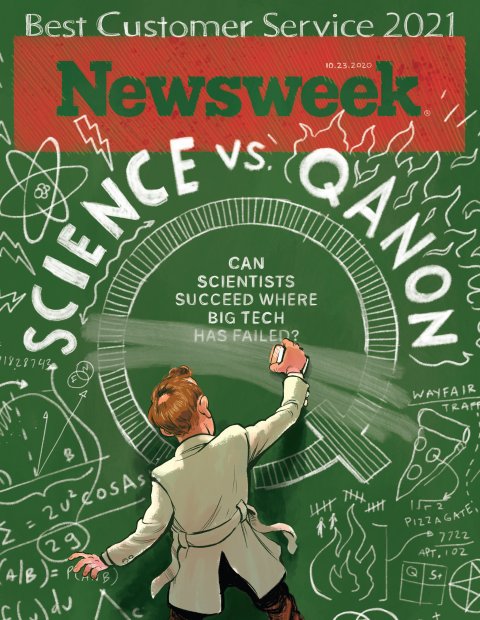

The tech industry's efforts to contain QAnon have failed to slow its spread. An FBI intelligence bulletin in May 2019 identified conspiracy-theory-driven extremists, QAnon among them, as a potential domestic-terrorism threat, even while Facebook remained one of the network's principal enablers. The company's own internal investigation, the results of which were leaked in August, identified more than three million members and followers on its platform, though the true number might be substantially higher. Yet it took until October 6 for Facebook to ban all QAnon groups and pages, as well as QAnon-associated accounts from Facebook-owned Instagram. What's more, the ban doesn't affect individual Facebook profiles that traffic in QAnon-related posts, a gaping loophole certain to leave the platform wide open to QAnon misinformation.

Twitter says it has suspended 7,000 or so QAnon-associated accounts for violating its standard rules, such as those against spam. These measures, says a spokesperson, "have reduced impressions on QAnon-related tweets by more than 50 percent."

The moves may be too little, too late. "The technology has generally done more to help those who purvey this misinformation than those trying to defend against it," says Travis Trammell, an active-duty Army lieutenant colonel who earlier this year received a science and engineering doctorate from Stanford. "I can't think of anything that has had such a nefariously disruptive impact on the United States."

Neil Johnson, a George Washington University physicist, agrees. "This is a problem that's bigger than the individuals in these communities, and bigger than any effort of a platform to control it," he says. "It's a huge challenge, and it absolutely requires new science to deal with it."

Johnson and Trammell are part of a cadre of scientists who are at the forefront of efforts to map QAnon and understand how it works. The explosion of disinformation that has upended American life and now threatens its democratic institutions has given rise to a new branch of science called "infodemiology." Inspired by epidemiology, the study of how diseases spread through a population, infodemiology seeks to understand how misinformation and conspiracy theories spread like a disease through a freewheeling democracy like America's, with the ultimate goal of learning how to stem that spread.

If Big Tech can't stop QAnon, perhaps scientists can.

A Map of the Battlefield

Nobody knows how big QAnon really is—or, in the parlance of network analysts, how many nodes and "edges," or connections between nodes, the network encompasses. Diaz alone has 353,000 followers and subscribers on Twitter and YouTube and generates tens of millions of edges a month throughout the "multiverse" of social-media platforms that QAnon uses. David Hayes, a former paramedic and now a full-time QAnon promoter, has a combined 800,000 followers and subscribers. Seventy candidates for Congress are QAnon nodes. More than 93,000 Twitter users mention QAnon in their profiles, and many times that number spread its messages on the platform. The network's most influential node, of course, is President Trump.

As a starting point, scientists are assembling data about QAnon and effective ways to analyze it. Among the most promising tools are data visualizations compiled by researchers like Erin Gallagher, an independent social-media expert. Almost from QAnon's earliest days, Gallagher has been training an array of software tools on members' activity on Facebook and Twitter, helping to illuminate the group's online anatomy and uncovering a variety of insights. "It's hard to fight a battle if you can't look at a map of the battlefield," she says.

Gallagher means that almost literally. Her specialty is producing QAnon "network maps," visual representations of which social-media accounts are spreading the misinformation, and where it's flowing. That's where nodes and edges come in, providing a means of visually representing the spread of bad information among online accounts. Fed by software that can "scrape" Twitter and Facebook for data on public posts and tweets (it can't get at private posts and messages), the maps appear to the uninitiated like swirling works of colorful abstract art. But to the trained eye they are an at-a-glance guide to the key sources of disinformation and their followers.

The results have helped Gallagher and her collaborators uncover a number of characteristics of QAnon. One surprising insight: QAnon is unusually decentralized, with new ideas and conversations constantly springing up throughout the membership, without much boost from "bots," or software masquerading as social-media accounts that pump out misinformation.

Another insight: While the pedophilia and human-trafficking claims remain the heart of the QAnon fantasy, the movement is drawing a lot of its members from the antivax movement, and more recently from "plandemic" followers, who believe "elites" plotted to cause the current pandemic. "A big theme among people who go down these rabbit holes is they're inclined to mistrust authorities," says Gallagher. QAnon seems to make room for all of them, she adds.
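At its simplest, a network map of the kind Gallagher produces is a list of nodes (accounts) and edges (connections between them). The Python sketch below is a hypothetical illustration, not Gallagher's software: it assumes scraped data arrives as (sharer, source) pairs, uses invented account names, and ranks accounts by how many distinct accounts re-share them, a rough numeric stand-in for the "key sources" a map shows at a glance.

```python
from collections import defaultdict

# Hypothetical share records: (account that shared, account whose post was shared).
# Each record is one directed edge in the network map; each account is a node.
SHARES = [
    ("fan_a", "source_1"),
    ("fan_b", "source_1"),
    ("fan_c", "source_1"),
    ("fan_c", "source_2"),
    ("fan_d", "fan_c"),
]


def build_map(shares):
    """Return the set of nodes and, for each node, the accounts that re-shared it."""
    nodes = set()
    resharers = defaultdict(set)
    for sharer, source in shares:
        nodes.update((sharer, source))
        resharers[source].add(sharer)
    return nodes, resharers


def key_sources(shares, top=2):
    """Rank accounts by number of distinct re-sharers (their in-degree), ties by name."""
    _, resharers = build_map(shares)
    ranked = sorted(resharers.items(), key=lambda item: (-len(item[1]), item[0]))
    return [(name, len(fans)) for name, fans in ranked[:top]]


if __name__ == "__main__":
    nodes, _ = build_map(SHARES)
    print(len(nodes), "nodes,", len(SHARES), "edges")  # 6 nodes, 5 edges
    print(key_sources(SHARES))  # [('source_1', 3), ('fan_c', 1)]
```

Real mapping tools work on far larger graphs and weigh many more signals; the point here is only that "key source" can be read off the edge list once it is counted.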

Plenty of other tools are springing up to help in the battle. A search engine of sorts called "Hoaxy" spots new assertions from low-credibility sources that seem to be catching on, and tracks their spread. It also allows anyone to root out dubious claims in their own Twitter feeds. "We can look at the trending of new narratives in real time," says Filippo Menczer, a computer science professor at Indiana University, where he directs the school's Observatory on Social Media (OSoMe). Menczer and his OSoMe colleagues are also tracking QAnon and other conspiracy and misinformation-oriented communities; they're developing a series of software tools and apps that anyone can use to gather data on these groups.

Menczer has used the data from these tools to put together an anatomy of a typical popular QAnon post or tweet. It's usually tied to a current controversial news topic, such as protester violence or mask-wearing; it throws in an element of truth; it's framed around a claim that will make people angry; and it fits the community's existing beliefs and delusions.

For example, QAnon network maps lit up in August with word that 39 missing children had been found in a Georgia trailer, and their kidnappers arrested. Was this a real-life Pizzagate? Was this the start of "the Storm," the move, long-anticipated in the QAnon world, by Trump and his loyalists to smash the deep-state child-sex-trafficking rings? Hundreds of thousands of posts and tweets suggested as much. "To people who are believers, these stories sound like they should be true, and it makes them feel they need to mobilize," says Menczer. The truth, however, was somewhat different. A statewide, two-week effort by Georgia law enforcement recovered all 39 of the missing children. The cases turned out to be mostly unrelated to one another.

The obvious solution to the spread of QAnon is to take down accounts that spread its nonsense and other potentially dangerous misinformation; in other words, removing the network nodes. But that's not happening, says Menczer, as witnessed by Facebook's unwillingness to remove QAnon-related profiles in addition to banning groups and pages, a ban experts expect will have limited effect. Facebook and most other platforms remain slow to remove someone for sharing untrue, delusional or even dangerous information. Labeling posts as such doesn't help, because believers see such labels as censorship and as part of the conspiracy. (Facebook did not respond to a request for comment.)

A more feasible approach might be cutting down the network edges, or connections, says Menczer. To do that, Facebook, Twitter and other social-media platforms could add "friction" to the sharing of posts, so that conspiracy thinking doesn't spread as quickly or widely, giving people room to hear more grounded opinions and think things through more clearly instead of reacting from the gut.

The Facebook ban on groups and pages takes a step in that direction, by depriving members of some of their established channels for sharing on the platform. But it leaves them free to find others, which is exactly what happened when Facebook enacted a narrower ban in August against 3,000 specific QAnon groups and pages. A potentially more effective way to add friction would be to get the platforms to hide "engagement metrics," the prominently displayed tallies of likes, retweets and reposts that indicate at a glance which posts are most popular. "Seeing those high metrics makes people more likely to believe a false narrative, and more likely to re-share it," says Menczer.
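Why modest friction can matter is easiest to see in a textbook branching process, used here as a deliberately simplified stand-in rather than Menczer's own model. The sketch assumes each account that shares a post exposes a fixed number of followers, each exposed follower re-shares with some probability, and friction (an assumption for illustration) simply lowers that probability. The function name and all numbers are hypothetical.

```python
def expected_reach(followers_per_account: int, reshare_prob: float, generations: int) -> float:
    """Expected number of exposures to a post in a simple branching process.

    Hypothetical toy model: every sharer exposes `followers_per_account` accounts,
    and each exposed account re-shares with probability `reshare_prob`. Audiences
    are assumed not to overlap, which overstates reach in real networks.
    """
    r = followers_per_account * reshare_prob  # expected new sharers per sharer
    sharers = 1.0  # generation 0: the original poster
    exposures = 0.0
    for _ in range(generations):
        exposures += sharers * followers_per_account  # this generation's followers see it
        sharers *= r  # expected sharers in the next generation
    return exposures


if __name__ == "__main__":
    # No friction: r = 10 * 0.15 = 1.5 > 1, so each generation is bigger than the last.
    print(expected_reach(10, 0.15, 5))  # 131.875
    # Friction cuts re-sharing to 5%: r = 0.5 < 1, so the cascade fizzles out.
    print(expected_reach(10, 0.05, 5))  # 19.375
```

The design choice worth noticing is the single number `r`: pushing it from above 1 to below 1 turns a growing cascade into a shrinking one, even though no node has been removed.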

Another step toward culling the network edges would be getting the platforms to dial back their mechanisms for calling users' attention to accounts and posts that match their interests. Those pointers are helpful for those who like to swap posts or tweets on cats, French cooking or parenting, but they leave those who click on QAnon propaganda ever more deeply steeped in the community and less exposed to more sensible mainstream thinking that might be protective. "Groups like QAnon weaponize the platforms into tools for helping people vulnerable to conspiracy theories to find each other," says Menczer. "The more they share, the more these ideas seem credible to them."

The Pleasure Hit

Countering the pull of QAnon requires understanding the nature of that pull, contends Jennifer Kavanagh, a political science professor at the Pardee RAND Graduate School in Santa Monica, Calif., and director of RAND's Strategy, Doctrine and Resources Program. In other words, what do QAnon members get out of joining up?

One thing they get is dopamine, the brain's pleasure chemical. Brain studies have shown that when people see information that confirms their belief in something that isn't true, they get a large dopamine hit. QAnon also provides a simple way to look at an otherwise baffling world and confers ready acceptance into a community. "Conspiracy theories make sense of the world," says Kavanagh. "And they provide that feeling of belonging to a group just by believing what the group believes."

To amplify those benefits, QAnon traffics in claims that tend to evoke strong emotional reactions in anyone who's ready to believe them. Enslaving children, pedophilia, bizarre anti-Christian rituals and a vast hidden empire of rich and powerful people pulling the strings—these are crimes and threats that strike deeply in minds that are open to accepting them as facts.

Understanding how rewarding and resonant QAnon's wild-sounding assertions can be to adherents makes it clear that it's a lost cause to combat the movement's spread with facts. Facts don't replace the powerful feelings and sense of community that conspiracy theories provide. Instead, a better strategy is to offer alternative narratives that can likewise deliver emotional benefits, but that are woven around the truth instead of delusion and push people to behave in productive, benign ways instead of lashing out with hate, disruption and violence.

Scientists are trying to come up with truth-based, emotionally resonant narratives to combat delusion and misinformation, but they have a long way to go, says Kavanagh. Even a winning narrative will be a tough sell if it comes from scientists and other establishment types that QAnon adherents have come to mistrust. Instead, the narrative must come from "trusted messengers who think like they do"—perhaps a local cop or member of the clergy—"whom they see as an authoritative source," she says.

Sherry Pagoto, a psychologist and social-media expert at the University of Connecticut, has been developing solutions built around those very strategies of resonant messages delivered by trusted messengers. She hasn't tackled QAnon head-on, but instead has focused her lab on winning over those who have been taken in by health misinformation—a group that has some overlap with QAnoners, given the latter's ties to the antivax and COVID-is-a-hoax communities.

In one study, Pagoto and her colleagues zeroed in on mothers who allow their teenage daughters to go to tanning salons in states where parental permission is required. Public-health pleas to keep children out of those salons due to skin-cancer risks have largely been ignored. But Pagoto's team took a roundabout route to getting the message across. They set up a Facebook group for mothers of teenage daughters, making it a forum for swapping all kinds of advice, complaints and support. "Instead of trying to give them health information they probably weren't ready to hear," says Pagoto, "we created a community that was relevant to their lives."

Her team had also researched how different types of online messages evoke different feelings, looking for clues as to what messages make people feel better instead of angrier or more upset. Armed with those insights, Pagoto's group started working in conversations about tanning salons, eventually salting the chatter with references to the health risks, while keeping the tone upbeat. Sure enough, some of the mothers ended up changing their minds about permitting their daughters to hit the salons.

Getting through to QAnoners and other victims of disinformation and conspiracy theories is a much bigger challenge, concedes Pagoto. But she insists the same basic approach ought to work. "If we can better understand what shapes their beliefs, we can do a better job of getting the right messages into their communities," she says. That might involve, for example, recruiting people to reach out to and engage with QAnon members in a friendlier way, gently nudging them toward questioning the movement's claims.

Superspreaders and Quarantines

Loose similarities between the spread of QAnon belief and the spread of infectious disease are the inspiration behind Trammell's approach to curbing the movement. In his research at Stanford, he borrowed mathematical models from epidemiologists to calculate how different conditions speed or slow the spread of an infection through a population.

Trammell's work has shown, for example, that QAnon has "superspreaders"—people who connect widely online, pulling in large numbers of converts. But online superspreaders have much more reach than their real-world disease counterparts because they don't have to limit their infectiousness to those they're physically near. As with COVID-19 and other infectious diseases, quarantines can help, according to his research. As a result, Trammell proposes that social-media platforms consider "quarantining," or walling off, some of the most active parts of QAnon and other conspiracy-theory communities, so that quarantined members can continue to communicate with each other but can't reach outside to "infect" the non-quarantined.
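Trammell's epidemiological models are not reproduced here. As a much simpler illustration of why walling off a superspreader matters, the sketch below assumes a worst case in which every exposure converts the account exposed, and models quarantine as blocking any message that leaves the quarantined group while still letting members reach one another (outsiders can still reach in, another simplifying assumption). The follower graph and account names are hypothetical.

```python
from collections import deque

# Hypothetical graph: each account maps to the accounts that see its posts.
GRAPH = {
    "hub": ["a", "b", "c", "d", "e"],  # a superspreader with wide reach
    "a": ["hub", "f"],
    "b": ["hub"],
    "c": ["hub", "g"],
    "d": [],
    "e": ["h"],
    "f": [],
    "g": [],
    "h": [],
}


def reach(graph, seed, quarantined=frozenset()):
    """Accounts reached from `seed` by breadth-first search, assuming every exposure infects.

    An edge from a quarantined account to a non-quarantined one is skipped, so the
    quarantined group can talk among itself but cannot spread ideas outward.
    """
    seen = {seed}
    queue = deque([seed])
    while queue:
        node = queue.popleft()
        for follower in graph.get(node, []):
            if node in quarantined and follower not in quarantined:
                continue  # the wall: nothing leaves the quarantine
            if follower not in seen:
                seen.add(follower)
                queue.append(follower)
    return seen


if __name__ == "__main__":
    print(len(reach(GRAPH, "a")))  # 9: via the hub, the idea reaches everyone
    print(sorted(reach(GRAPH, "a", frozenset({"hub"}))))  # ['a', 'f', 'hub']
```

Quarantining a single well-connected account cuts this toy cascade from nine accounts to three, which is the intuition behind targeting superspreaders rather than ordinary members.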

Preventive measures may be more effective than trying to "cure" those who have already succumbed to conspiracy theories. "Once the ideas take hold in someone, extracting them is extremely difficult," says Trammell. Instead, he advocates for aiming the strongest efforts at non-QAnoners, so that those who are more vulnerable to conspiracy thinking can avoid infection if exposed. For example, social-media platforms could broadly issue warnings about certain false narratives that are pulling people in—a technique he calls "prebunking" conspiracy theories, and which is roughly analogous to vaccination.

As with real vaccines, the prebunking messaging must be carefully designed and tested to avoid side effects. One of those potential side effects is that when people keep hearing cautions about bad information, they can become as skeptical of legitimate, factual assertions from trustworthy sources as they are of conspiracy theorists.
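The vaccination analogy has a standard quantitative form in epidemiology. In the textbook SIR model, each infected person passes the infection to $R_0$ others on average when everyone is susceptible; if a fraction $v$ is immune beforehand, the effective reproduction number falls, and an outbreak cannot take off once it drops below 1. Treating prebunking as perfect immunization in a well-mixed population is an assumption made here for illustration, not a result reported from Trammell's research, and $R_0 = 2$ is a hypothetical value:

```latex
R_{\text{eff}} = R_0\,(1 - v) < 1
\quad\Longleftrightarrow\quad
v > v_c = 1 - \frac{1}{R_0},
\qquad
R_0 = 2 \;\Rightarrow\; v_c = 1 - \tfrac{1}{2} = 0.5 .
```

Under those assumptions, half the exposed audience would need to be prebunked before a narrative with that reproduction number stalled; a more contagious narrative would need a larger share, and imperfect "immunity" would raise the bar further.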

Whatever strategies are adopted to combat QAnon, they're bound to fail if they're limited to only a few of the major social-media platforms. That's what George Washington University physicist Johnson worries about. Johnson's specialty is unraveling the mechanisms behind complex, chaotic systems, an approach he has applied to such daunting phenomena as superconductivity and electrical patterns in the brain. But in recent years he has been more focused on unraveling the hidden patterns in the online spread of QAnon and other extremist movements, analyzing them in terms of physics concepts such as multiverses, phase transitions and shock waves. "The complexity of these communities makes them difficult to control," he says. "It's their key advantage."

That complexity stems in part from the many online platforms that QAnon and other conspiracy-theory and terrorist groups use. QAnon activity has blossomed at different times across Reddit, 4chan, 8chan, 8kun, Twitter, Instagram, Facebook and many other outlets. That creates what he calls a multiverse of QAnon followers, with each platform's community developing its own followers and patterns of behavior. Even worse, each community changes over time, and members hop between them, sometimes individually and sometimes en masse, defying any one platform's efforts to rein them in. "Like cars trying to get around traffic, members switch platforms to get around new restrictions and moderators, taking followers with them," says Johnson. That's exactly why Facebook's ban on groups and pages is likely to be little more than a bump in the road to ever-wider streams of QAnon misinformation.

Because QAnon is so decentralized, going after individual members is "like trying to find the molecule in a pot of water that's made the water boil," Johnson says. What matters is the behavior of the collective, which is a strength of QAnon and other conspiracy-theory movements. But it's a strength that could be turned into a line of attack. When water is close to boiling, small bubbles form along the sides and bottom of a pot. By breaking up those bubbles—perhaps a hundred to a pot—it's possible to prevent water from boiling. Likewise, an effective strategy to contain QAnon might be to target specific small clusters of QAnon members whose behaviors are closely influencing one another, and whose activity threatens to trigger potentially more dangerous behavior in the larger community.

How exactly to break up QAnon "bubbles" is a subject of Johnson's current research. QAnon members in a cluster tend to focus on their shared attitudes toward certain things they think are happening in the world, whether it's particular elements of a conspiracy theory or certain recent news events. At the same time, they tend to ignore topics they may disagree on, be it religion, politics, health, or others. That may create an opportunity to drive a wedge between these members. "If we can call attention to the points of possible disagreement, we may be able to pull them apart so that their behavior becomes less correlated," says Johnson. "It's basically the opposite of conflict resolution."

Other research suggests that if you change the opinions of a third of a community, the rest of the community is likely to follow. By sowing discord in the right clusters of members, it might be possible to bring QAnon down.

An Ongoing Challenge

No scientist yet claims to have a ready-to-go solution to the problem of QAnon and other troubling online communities. Part of the reason progress is slower than it should be is that scientists can't get all the data they need to fully analyze the online behavior of these communities, because the platforms refuse to share it all. "We've been very unsatisfied so far with some of the platforms holding back data," says Menczer. "It's a very large puzzle, and we're not being given access to all the pieces."

Most scientists point straight at Facebook as being the biggest offender when it comes to restricting access to data. But some note that the company has been moving in the direction of providing more data, if slowly, and some platforms, like Twitter, have been relatively forthcoming. Twitter says it has been setting up new tools specifically aimed at helping academic researchers gather and analyze data on the platform's traffic.

Even with better data, the challenge will remain steep, because scientists are aiming at a moving target. "Online behavior changes faster than the usual speed at which scientists understand things, and then policy-makers can pass a law," says Menczer. "The things we've figured out so far are only likely to have small effects."

The experience of COVID-19, and the misinformation around it, has been a disappointment to the infodemiologists. "We had hoped COVID-19 would help people have a better appreciation of how facts matter," says Kavanagh. "But we've only seen conspiracy-theory thinking continue to get worse. And I don't think we've hit bottom yet."

The infodemiologists may have to follow the lead of their epidemiologist siblings and set modest, achievable goals. "Epidemiologists usually know they can't eliminate a disease," says Trammell. "But they look for ways to slow the spread to sustainable levels. We should be able to get to a manageable level of disinformation."

"A manageable level of disinformation" is starting to sound pretty good.

This story was updated on November 25, 7:23 a.m. ET, to include the political leaning and aim of the non-profit research group Media Matters for America.