Abstract

The field of implementation science has made notable strides to enhance the practice of tailoring through the development of implementation strategy taxonomies (e.g., Expert Recommendations for Implementing Change; Waltz et al., In Implement Sci 10:109, 2015) and numerous tailoring methodologies (e.g., concept mapping, conjoint analysis, group model building, and intervention mapping; Powell et al., In J Behav Health Serv Res 44:177–194, 2017). However, there is growing concern about a widening gap between implementation science research and what is practical in real-world settings, given resource and time constraints (Beidas et al., In Implement Sci 17:55, 2022; Lewis et al., In Implement Sci 13:68, 2018). Overly complex implementation strategies and misalignment with practitioner priorities threaten progress in the field. To address these threats, implementation science thought leaders have suggested using rapid approaches to contextual inquiry; developing practical approaches to implementation strategy design, selection, and tailoring; and embracing an embedded implementation science researcher model that prioritizes partner needs over researcher interests (Beidas et al., In Implement Sci 17:55, 2022). Aligned with these recommendations, we introduce the Activity Readiness Tool (ART), a brief, practitioner-friendly survey that assesses discrete determinants of practice through an implementation readiness lens. We illustrate how the tool can be used as a rapid approach to facilitate implementation efforts in a case example involving a national integrated care initiative. The ART can serve as a quick, user-friendly companion to an array of existing evidence-based tailoring methods and tools.

Introduction

Intervention tailoring involves prospectively identifying determinants of practice (i.e., barriers and facilitators) in a specific setting and using strategies that address them to improve implementation (Baker et al., 2015). Tailoring has long been assumed to be essential for effective implementation and for scaling evidence-based practices (EBPs) to improve population health and health equity. Yet the most recent Cochrane systematic review of tailored interventions found a small-to-moderate effect of tailored interventions (Baker et al., 2015). The review underscored the need for more robust research on, and better understanding of, the practice of tailoring. Specifically, the authors concluded, “it is not yet clear how best to tailor interventions and therefore not clear what the effect of an optimally tailored intervention would be” (Baker et al., 2015, p. 2). Without well-documented, detailed descriptions of tailoring practices, meaningful insights about the effectiveness of tailoring strategies remain elusive. Indeed, implementation researchers and practitioners have increasingly noted the need for concrete examples that illuminate the “black box” (Powell et al., 2017) of tailoring methods (Lewis & Myhra, 2017; Powell et al., 2017; Valenta et al., 2023).

To enhance the practice of tailoring, the field of implementation science has made notable strides through the development of taxonomies of implementation strategies (e.g., Expert Recommendations for Implementing Change (ERIC); Waltz et al., 2015) and numerous tailoring methodologies (e.g., concept mapping (Kwok et al., 2020), conjoint analysis (Farley et al., 2013; Lewis et al., 2018; Shrestha et al., 2018), group model building (Calancie et al., 2022; Swierad et al., 2020), and intervention mapping (Fernandez et al., 2019; Powell et al., 2017)). At the same time, there is growing concern about a widening gap between implementation science research and what is practical in real-world settings, given resource and time constraints (Beidas et al., 2022; Lewis et al., 2018; Lyon et al., 2020). For instance, conjoint analysis is recognized as a strong methodological approach for collaboratively ranking implementation barriers and strategies by feasibility and importance, but it is intensive in time, skill, and personnel (Lewis et al., 2018). In a pre-mortem assessment of the implementation science field, Beidas et al. (2022) warn that “overly complex implementation strategies and approaches [and an] inability to align timelines and priorities with partners” (p. 1) can undermine the value of the field. Among their proposed solutions to these threats, Beidas et al. (2022) call for implementation models that elevate pragmatic approaches to implementation strategy design, selection, and tailoring and that place partner priorities above researcher interests. Similarly, implementation science thought leaders such as Robinson and Damschroder (2023) and Stanick et al. (2019) recognize the need for easy-to-use implementation science tools. More specifically, Robinson and Damschroder (2023) note the need for quantitative assessments that efficiently identify determinants of practice.

While a variety of tools are intended to guide implementation practitioners toward feasible best practices, the strategies they offer have been critiqued as too “general,” revealing a gap in the literature concerning the discrete activities required for tailored strategies to succeed (Engell et al., 2023; Howell et al., 2022; Miake-Lye et al., 2020). For example, one of ERIC’s strategies is “form a coalition” (Waltz et al., 2015); in practice, this strategy requires many discrete implementation activities (e.g., identifying and recruiting coalition members, developing shared goals and governance, and establishing an infrastructure for collaboration). Implementation activities are the key elements of an intervention that have a measurable output. Beyond any single strategy, most program interventions are complex to implement because they involve multiple dynamic, interacting components or activities (Craig et al., 2008; McHugh et al., 2022). In a healthcare setting, for example, even something as seemingly simple as implementing a depression screener involves multiple discrete activities: obtaining buy-in from healthcare team members, ensuring providers know how to administer the screener, having informatics staff update the electronic health record to chart scores, and auditing to ensure proper data entry. In addition, each discrete activity is embedded within a unique healthcare setting. Thus, a data-informed understanding of the context (e.g., availability of resources, staff motivation, and workflow processes across ecological levels) is critical to the successful implementation of each activity (Metz et al., 2023; Rogers, 2008) and therefore to the success of the overall program. With minimal guidance about how to actually carry out a best-practice strategy, practitioners can be overwhelmed by it. However, if practitioners can identify the specific activities associated with an implementation strategy, tailoring strategies can be applied at the activity level to better support implementation success.

To address the need for practical, activity-level tailoring strategies in the field, we introduce the Activity Readiness Tool (ART), a brief, practitioner-friendly tool that assesses determinants of practice for discrete implementation activities through an implementation readiness lens. In this article, we first provide background on readiness and the ART and then present a case example illustrating how the tool can be used as a rapid approach to facilitate implementation efforts. We propose that the ART can be used on its own or to complement existing evidence-based tailoring methods and tools.

Readiness for Activity-Level Implementation

Readiness is a well-established implementation science concept. A setting’s readiness for an intervention (an evidence-based practice or program) can be assessed at two levels: (1) the general (global) level and (2) the activity level. Global readiness refers to how ready stakeholders are for an intervention as a whole. Activity readiness refers to how ready stakeholders are for the specific key activities involved in implementing the intervention. Although implementation literature recognizes the importance of attending to the activity level, little documented attention has been paid to a setting’s readiness for activity-level implementation. The implementation of interventions can be better tailored when discrete activities are assessed for readiness. R = MC², or Readiness = Motivation × General Capacity × Innovation-Specific Capacity, is a readiness heuristic that reflects implementation barriers and facilitators across three components: motivation, general capacity, and innovation-specific capacity (Scaccia et al., 2015). The components and subcomponents of R = MC² are presented in Table 1. R = MC² is the foundation for the ART.
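Written symbolically, the heuristic can be expressed as shown here. The symbol names are our own shorthand, not notation from Scaccia et al. (2015), and we read the multiplication as conceptual rather than as a literal scoring rule: it signals that readiness depends on all three components together, so strength in one component does not fully offset a deficit in another. The "C²" in the name refers to the two capacity terms.

```latex
R = M \times C_{G} \times C_{IS}
\qquad \text{where } R = \text{readiness},\; M = \text{motivation},\;
C_{G} = \text{general capacity},\; C_{IS} = \text{innovation-specific capacity}
```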

The rationale for integrating readiness and tailoring, as we have done with the ART, rests on three premises. First, readiness is a critical success factor for implementation effectiveness (Drzensky et al., 2012; Holt & Vardaman, 2013). Second, the components and subcomponents of readiness mirror the determinants of practice (i.e., barriers to and facilitators of implementation; Baker et al., 2015; Scaccia et al., 2015; Scott et al., 2017). Third, assessing implementation readiness complements the improvement approaches (e.g., strategy selection and use) involved in tailoring (Baldwin et al., 2022; Domlyn et al., 2021; Kenworthy et al., 2022; Wichmann et al., 2020).

About the ART

What is the ART?

The Activity Readiness Tool (ART) is a readiness-focused, practitioner-friendly tool for activity-level tailoring. The customizable tool contains 14 items and requires less than 10 min to complete. The ART is administered to individuals involved in the targeted activity. It is suitable for use prior to and during implementation, as tailoring can occur both prior to implementation and iteratively to maximize the likelihood of implementation success at the activity level (McHugh et al., 2022). Additionally, the ART can be used independently or can complement other general implementation tailoring assessments.

Customizing the ART involves adapting each of the 14 assessment items to the particular implementation activity by inserting text that references that activity (see the section titled The ART in Action for an example of how we customized the ART). We recommend including input from individuals involved in implementing the intervention when adapting the ART to a particular activity, because stakeholder involvement aids in identifying and selecting appropriate activities.
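The customization step can be sketched in code. This is a minimal illustration under stated assumptions: the three item stems are hypothetical placeholders written for this example (the ART's actual 14 items are not reproduced here), and each stem is phrased so the activity can be inserted as a verb phrase. Python's standard `string.Template` performs the substitution.

```python
from string import Template

# Hypothetical item stems for illustration only; they are NOT the ART's actual
# 14 items. Each stem expects the activity as a verb phrase (e.g., "complete ...").
ITEM_TEMPLATES = [
    Template("I am motivated to $activity."),
    Template("I have the knowledge I need to $activity."),
    Template("I have enough time to $activity."),
]


def customize_items(templates, activity):
    """Return item texts with the target activity inserted into every stem."""
    activity = activity.strip()
    if not activity:
        raise ValueError("activity description must not be empty")
    # substitute() raises KeyError if a stem uses a placeholder we did not supply.
    return [template.substitute(activity=activity) for template in templates]


items = customize_items(ITEM_TEMPLATES, "complete the PHQ-2/9 training")
for number, item in enumerate(items, start=1):
    print(f"{number}. {item}")
```

Keeping the stems in one list means the same wording can be reused across activities, with only the inserted phrase changing from one administration to the next.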

Why and How the ART was Developed

The ART is rooted in the proposition that examining implementation readiness at more discrete levels of implementation (i.e., key activities that make up an intervention) can shed light on mechanisms of change (barriers, facilitators) that might otherwise be overlooked, but which are crucial to achieve quality implementation and targeted program outcomes. Our development of the ART emerged from the integration of organizational readiness research and practice-based learning. In implementing evidence-based practices (EBPs) across diverse settings and sectors, we observed (a) most policies, practices, programs, and processes—even those of modest scale—are composed of multiple discrete activities; (b) readiness for each activity is important to implementation effectiveness; and (c) readiness for one particular activity does not automatically translate to readiness for other activities within the same intervention. Additionally, we observed that attending to readiness for these discrete activities was critical to tailoring implementation supports and ensuring implementation quality.

We used two components of the R = MC² readiness heuristic to develop the ART: Motivation and Innovation-Specific Capacity. Motivation reflects practitioners’ willingness to implement a particular activity. Innovation-Specific Capacity reflects practitioners’ ability (e.g., knowledge, skills, resources) to engage in the target implementation activity. Item development for each of these readiness components was informed by the Readiness Diagnostic Scale (RDS), an R = MC² measurement scale for assessing global readiness with broad applicability across contexts (e.g., military, public health, and healthcare; Domlyn et al., 2021; Livet et al., 2020; Scott et al., 2017, 2021). The third component of the R = MC² heuristic (i.e., General Capacity) was not incorporated into the ART, given the tool’s focus on implementation at the activity level.

The ART in Action

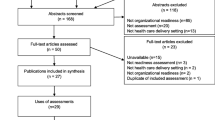

As interest in intervention tailoring grows in healthcare settings (Movsisyan et al., 2019; Valenta et al., 2023), we illustrate the use of the ART in a healthcare clinic through the Integrated Care Leadership Program (ICLP). Our example pairs the ART with an RDS and the Plan-Do-Study-Act (PDSA) quality improvement approach to tailor activity-level implementation and build implementation readiness (see Fig. 1). PDSA is a systematic approach that guides users through tests of change: developing a plan (Plan), carrying out the test of change (Do), learning from that test (Study), and modifying the test as needed for the next cycle (Act; Langley et al., 2009). The global intervention in this case example is integrated behavioral health and primary care (i.e., integrated care). The activity-level focus is completion of a Patient Health Questionnaire (PHQ) training on administering two versions of the depression screener (PHQ-2 and PHQ-9). We used the ART to assess healthcare providers’ readiness to complete the PHQ training, which was a discrete activity in the ICLP.

Fig. 1 The Activity Readiness Tool (ART) for implementation tailoring and building implementation readiness. Note: As an activity-level assessment instrument, the ART can be used in conjunction with a general readiness assessment (e.g., Readiness Diagnostic Scale; RDS) and the Plan-Do-Study-Act (PDSA) approach. This figure depicts administration of the ART at two PDSA stages: (i) prior to implementation to inform planning and (ii) during implementation to monitor and continuously tailor implementation strategies to the needs of the context.

Setting: The Integrated Care Leadership Program

Launched by the Satcher Health Leadership Institute at Morehouse School of Medicine (SHLI/MSM), the Integrated Care Leadership Program (ICLP) was designed to enhance mental health equity through the promotion of integrated care. The goals of the ICLP were to (1) build capacity within clinical sites to integrate behavioral health and primary care; (2) assess and build readiness for integrated care at participating healthcare practices via education, implementation supports, and technical assistance and feedback; and (3) promote health equity among vulnerable populations by strengthening capacity among providers and clinics to implement and sustain integrated practice. With funding from Kaiser Permanente National Community Benefit and the Robert Wood Johnson Foundation, a multisite partnership was established between the University of South Carolina, the University of North Carolina at Charlotte, and SHLI/MSM to monitor setting readiness for the ICLP. The ICLP Intervention Team was led by a psychiatrist and supported by an associate director and two technical assistants. The ICLP Readiness Assessment Team consisted of a director, associate director (both clinical-community psychologists), and two doctoral trainees in clinical-community psychology.

Nineteen healthcare organizations (17 primary care practices and two behavioral health clinics) from 11 states participated in the ICLP. Fourteen practices were in urban areas, four in rural areas, and one in a suburban area. Six practices were federally qualified health centers, four were public non-profits, five were private non-profits, one was for-profit, and three did not specify their type. The components of the ICLP learning collaborative included an online curriculum (focused on transformative leadership, the essentials of practice change and improvement, and sustainability), monthly webinars, technical assistance, clinical data analysis, and organizational readiness assessments. Participating sites identified their own goals for the program (e.g., staff trainings, workflow modifications, and implementing or expanding behavioral health screening practices) and conducted monthly PDSAs on those specific goals. The ICLP team visited participating practices, giving sites the opportunity to showcase their facilities and receive intensive technical assistance. Selected sites were awarded high-impact innovation grants to support projects aimed at implementing process improvements toward integrated care. Program foci included staff training, leadership development, and enhanced depression screening. Fifteen sites that successfully completed the ICLP presented their work at ICLP summits at Morehouse School of Medicine.

Assessing Global Readiness for Integrated Care

During the ICLP, global readiness for integrated care was assessed with the Readiness for Integrated Care Questionnaire (RICQ; Scott et al., 2017), a version of the RDS adapted to integrated care. The questionnaire measures healthcare organizational readiness for implementing integrated care through 82 items (Scott et al., 2017). Sample items include “Integrated care fits well with the values of our practice,” which assesses compatibility of the approach, and “We have the knowledge we need to integrate care,” which assesses innovation-specific knowledge. The RICQ was administered to all participating sites at three time points, and after each administration the Readiness Assessment Team prepared customized reports summarizing readiness trends for each site. In the ICLP, baseline RICQ data were used to inform the initial PDSA cycle, which involved identifying an implementation activity.

Assessing Activity Readiness for Integrated Care

We used the ART to assess clinic readiness for activities associated with implementing integrated care (e.g., mental health screening, electronic medical record development). Clinic staff determined which activities the ART would assess, so the activities assessed differed by clinic. Examples of the activities identified include:

- Implement the Rapid Assessment for Adolescent Preventive Services (i.e., a risk screening tool)
- Train faculty and residents about the Patient Health Questionnaire (PHQ) depression screening tool
- Run reports on the electronic medical record to track patient care trends
- Design an operational workflow for mental health screening

We used the ART to monitor barriers and facilitators during implementation of a discrete activity. Each ART was completed independently by at least three clinic staff representing different positions (e.g., faculty physician, medical resident, administrative staff, behavioral health counselor). Responses were averaged to determine activity-level readiness. For each ART administration, the Readiness Assessment Team provided clinics with de-identified reports that included a summary of trends and discussion questions to prompt reflection on those trends. Additionally, a member of the Readiness Assessment Team reviewed the ART reports with each clinic to support sensemaking and data use. The ICLP technical assistants were also available to discuss results and their implications for practice improvement.
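The averaging step can be sketched as follows. The response scale (1 to 5), the item labels, the ratings, and the threshold for flagging low-scoring items as possible barriers are all hypothetical assumptions for illustration; they are not ICLP or Central Primary Care data. Only the minimum of three respondents reflects the procedure described in the text.

```python
from statistics import mean

MIN_RESPONDENTS = 3  # ICLP procedure: at least three staff complete each ART


def item_means(responses):
    """Average each item across respondents (one dict of item -> rating per respondent)."""
    if len(responses) < MIN_RESPONDENTS:
        raise ValueError(
            f"need at least {MIN_RESPONDENTS} respondents, got {len(responses)}"
        )
    items = responses[0].keys()
    return {item: mean(r[item] for r in responses) for item in items}


def flag_low_items(means, threshold=3.0):
    """Return (item, mean) pairs below the hypothetical threshold, lowest first."""
    low = [(item, score) for item, score in means.items() if score < threshold]
    return sorted(low, key=lambda pair: pair[1])


# Hypothetical 1-5 ratings from three staff in different roles (illustrative only).
responses = [
    {"motivation": 5, "knowledge": 4, "time": 2},
    {"motivation": 4, "knowledge": 4, "time": 2},
    {"motivation": 5, "knowledge": 3, "time": 3},
]

means = item_means(responses)
for item, score in means.items():
    print(f"{item}: {score:.2f}")
print("possible barriers:", flag_low_items(means))
```

Any threshold for what counts as a low score would need to be agreed on with stakeholders rather than fixed in code.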

Application of the ART at Central Primary Care

Central Primary Care (pseudonym) is a healthcare organization that participated in the ICLP. It is a medical clinic and academic center within a larger health system. This clinic began integrated care efforts by implementing a depression screener. Clinic staff (faculty and resident physicians) participated in the ICLP to advance their level of integration. Through the baseline RICQ assessment, Central Primary Care staff identified “innovation-specific knowledge and skills” as an area of lower readiness. The staff reflected that they were motivated to engage in integrated care activities but needed training about integrated care, particularly how to effectively implement the Patient Health Questionnaire (PHQ-2/9). They noted the presence of a “Program Champion” as a strength because one of the ICLP participants and the medical director were highly supportive of integrated care.

Informed by the RICQ trends and existing clinic progress, Central Primary Care staff chose to focus their integrated care efforts on improving implementation of the PHQ depression screener. They utilized the PDSA approach to prepare for improved implementation and selected “train staff about the Patient Health Questionnaire (PHQ-2/9)” for their PDSA activity. After a period of planning and implementing an asynchronous PHQ training, staff completed an ART as part of their PDSA process (during the “Do” stage). The ART was adapted to the specified PDSA implementation activity (i.e., train staff about the PHQ) and used to assess clinic staff readiness for the activity.

As a tool for identifying determinants of practice at the activity level, the ART revealed that sufficient time and resources had not been dedicated to the training (Central Primary Care ART data are available in Table 2). Clinic staff independently reviewed their ART clinic report, and the Readiness Assessment Team then facilitated a meeting to discuss it. During the meeting, physician residents explained that it was difficult to prioritize the training in their busy medical setting; heavy patient schedules competed with setting aside time to complete the PHQ training. The staff discussed possible strategies for overcoming the identified barriers. Ultimately, the clinic staff changed their implementation strategy by scheduling the PHQ training during Grand Rounds, a protected time when residents were routinely available. Staff then proceeded with renewed efforts. After six months, the Readiness Assessment Team re-administered the RICQ to examine changes in clinic readiness for integrated care. Figure 2 summarizes how Central Primary Care staff engaged in the RICQ, PDSA, and ART.

Fig. 2 Process for assessing global and activity readiness and use of PDSA at Central Primary Care. Note: This figure depicts the use of the ART at Central Primary Care. Selection of the implementation activity was informed by the Readiness for Integrated Care Questionnaire (RICQ). Clinic staff used PDSA to support activity-level implementation. During the “Do” stage of PDSA, the ART was administered, focusing on “training staff (faculty and physician residents) about the Patient Health Questionnaire (PHQ).” The RICQ was re-administered six months after baseline to assess changes in global readiness for integrated care. Increased innovation-specific knowledge and skills were evident.

Discussion

Given the availability of rigorously developed tailoring frameworks, checklists, and robust change management databases, tailoring interventions might seem relatively straightforward: first, assess barriers and facilitators to take stock of the situation; next, consult the evidence base of strategies; and finally, select the strategy or strategies best suited to the implementation context (Fernandez et al., 2022). However, implementation outcomes still fall short (Baker et al., 2015). While the assortment of implementation resources is highly valuable, there is growing demand for practical, easy-to-use tools and rapid methods to adapt and optimize interventions (Glasgow & Chambers, 2012; Glasgow et al., 2014; Robinson & Damschroder, 2023; Stanick et al., 2019; Valenta et al., 2023). Experience with complex interventions points to a common-sense observation: interventions are composed of multiple activities that, when successfully carried out, contribute to overall implementation effectiveness and intervention outcomes. Omission or neglect at the activity level can impede or compromise implementation of the full intervention and, consequently, its targeted outcomes.

In this article, we introduced the ART—a readiness-focused, practitioner-friendly tool for activity-level tailoring that can be used in conjunction with existing implementation approaches and resources. The ART contributes to the burgeoning demand for pragmatic measures of context to support implementation. Reflecting key criteria for pragmatic measures (Glasgow & Riley, 2013; Stanick et al., 2019), the ART is low burden (< 10 min to complete), actionable, stakeholder relevant, and easy to administer.

Implications

The ART and Participant Engagement

Use of the ART during the ICLP initiative reinforced the importance of engagement, specifically thoughtful collaboration among members of the Readiness Assessment Team, implementation technical assistants, and clinic staff. Given the organic evolution of the ART and initiative constraints (e.g., project timeline and clinic staff capacity), our initial applications of the ART involved modest staff input into the tool’s development. Despite this limited input, staff engagement in completing the ART was high, which suggests that clinic staff perceived the ART as valuable (relevance) and easy to use (low burden).

We believe early stakeholder engagement is critical to implementation effectiveness. We view engagement as involving bidirectional idea exchange and shared decision-making. In future applications of the ART, we encourage a co-design process in which conversations with organizational stakeholders shape the ART. Involving organizational stakeholders (e.g., staff, leadership) helps ensure that the ART is effectively customized to the context. It can also enhance measurement validity by ensuring that the written language is appropriate and that items measure what they are intended to measure. Furthermore, involving stakeholders early can empower participants to engage in and drive the implementation process by ensuring that the methods used are appropriate, feasible, and acceptable (Engell et al., 2023; Fernandez et al., 2022; Movsisyan et al., 2019). A combination of factors is reported to favor stakeholder inclusion, including strong joint interprofessional engagement, well-established academic-practice partnerships, and sufficient funding support (Albert et al., 2019; Boaz et al., 2018; Raine et al., 2016; Rycroft-Malone et al., 2011; Scott et al., 2019; Valenta et al., 2023). Lewis and colleagues (2018) illustrate a robust process for engaging organizational stakeholders throughout the process of determining implementation barriers and strategies.

The ART and Ongoing Monitoring

Assessing determinants of practice as implementation is adjusted enables practitioners to track and understand the impact of tailoring strategies (Durlak & DuPre, 2008; Greenhalgh et al., 2004). Systematic documentation of these processes addresses a persistent gap in the tailoring literature noted in Baker et al.’s (2015) systematic review: the lack of reporting on whether selected tailoring strategies overcame the targeted barriers. The study and documentation of activity-level change mechanisms in particular are needed to advance understanding of what makes program implementation work (e.g., when, under what conditions, and for whom). Through the ICLP, we concluded that administering the ART repeatedly (e.g., monthly, quarterly) would be a valuable way to monitor determinants of practice. Routine contextual assessment and implementation monitoring align with implementation research and recommendations (Domlyn et al., 2021; Scott et al., 2017; Waltz et al., 2019). The brevity of the ART minimizes response burden, and its simple scoring lends itself to readily available trend data. In this way, the efficiency of the ART offers advantages at the assessment stage of tailoring that other tailoring approaches do not.
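Because ART scoring reduces to item means, trends across repeated administrations are simple to compute. The sketch below assumes hypothetical item means from three monthly administrations (illustrative values, not ICLP data) and reports each item's trajectory along with its change between consecutive administrations.

```python
# Hypothetical item means from three monthly ART administrations (illustrative only).
administrations = [
    ("month 1", {"motivation": 4.5, "knowledge": 3.0, "time": 2.25}),
    ("month 2", {"motivation": 4.5, "knowledge": 3.5, "time": 3.0}),
    ("month 3", {"motivation": 4.25, "knowledge": 3.75, "time": 3.5}),
]


def item_trajectories(administrations):
    """Map each item to its means in administration order."""
    first_means = administrations[0][1]
    return {
        item: [means[item] for _, means in administrations] for item in first_means
    }


def consecutive_changes(trajectory):
    """Change between consecutive administrations (later minus earlier)."""
    return [round(later - earlier, 2) for earlier, later in zip(trajectory, trajectory[1:])]


for item, trajectory in item_trajectories(administrations).items():
    print(item, trajectory, consecutive_changes(trajectory))
```

A positive change suggests movement toward readiness on that item, and a negative change flags an item worth revisiting at the next PDSA cycle.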

The ART and Other Implementation Tools

In the ICLP, activity selection was guided by data from the baseline RICQ. The activity selection process was group based, involving occupationally diverse staff within each clinic. We observed that pairing these tools (ART, RICQ) and processes (PDSA, collaborative conversation) was complementary and productive. Therefore, we believe the ART can be used in conjunction with other implementation tools, strategies, and approaches in addition to its value as an independent tailoring tool.

From a practical standpoint, organizations have limited time and resources, so practitioners need to be selective about which activities to assess with the ART. Activity selection can also be accomplished through a prioritization process. For example, Fernandez et al. (2022) offer a useful prioritization tool that draws on two indicators: importance and changeability. We hypothesize that the ART could be used more effectively if Fernandez et al.’s prioritization tool served as an intermediate step between the RICQ and PDSA to support systematic activity selection. In the ICLP context, for instance, stakeholders might identify important implementation activities for integrated care based on results from the RICQ (global readiness assessment) and then use Fernandez et al.’s prioritization tool to systematically select the implementation activity to target first. The ART would then be customized to the selected activity, which would become the PDSA focus.
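One way to picture an importance-and-changeability screen is sketched below. This is our own simplified illustration, not Fernandez et al.'s (2022) tool: the 1 to 5 group ratings, the cutoff of 3.0, and the ranking rule (activities rated at or above the cutoff on both indicators first, then by combined score) are hypothetical assumptions. The candidate activities come from the ICLP examples listed earlier, but their ratings are invented.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    activity: str
    importance: float     # hypothetical 1-5 group rating
    changeability: float  # hypothetical 1-5 group rating


def prioritize(candidates, cutoff=3.0):
    """Rank activities: high on both indicators first, then by combined score."""
    def sort_key(c):
        high_on_both = c.importance >= cutoff and c.changeability >= cutoff
        # False sorts before True, so activities high on both come first.
        return (not high_on_both, -(c.importance + c.changeability))
    return sorted(candidates, key=sort_key)


# Activities from the ICLP examples; the ratings are invented for illustration.
candidates = [
    Candidate("Run reports on the electronic medical record", 4.0, 2.5),
    Candidate("Train faculty and residents about the PHQ", 4.5, 4.0),
    Candidate("Design an operational workflow for mental health screening", 4.0, 3.5),
]

for rank, c in enumerate(prioritize(candidates), start=1):
    print(rank, c.activity)
```

The top-ranked activity would then be the one customized into the ART and taken up as the PDSA focus.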

Limitations & Future Directions

An aim of this article is to illuminate the “black box” of tailoring (Powell et al., 2017) through a detailed account of a real-world use of a readiness-focused tailoring tool. Accordingly, we used a descriptive, process-oriented study design. Studies that report descriptively and transparently on the processes involved in tailoring, including details about timeline and long-term value, are needed to make a business case to funders, policymakers, and organizational leadership for resources that support implementation monitoring and improvement efforts (Boaz et al., 2018; Raine et al., 2016; Valenta et al., 2023). Descriptive studies are also particularly valuable for practitioners engaged in intervention implementation, as they provide an implementation roadmap and field-based insights. A limitation of this descriptive study is that the ART and the RDS were not evaluated against other existing tools. As Baker et al.’s (2015) Cochrane review indicates, controlled study designs remain important for understanding the cost-effectiveness of tailoring and the comparative value of any tailoring approach.

Our case example illustrated a single cycle of ART administration, which precludes insight into the longitudinal impact of the ART or the tool’s sensitivity to change. We used a single administration because of project resource and time constraints, including the availability of technical assistance providers and participating clinic staff. We recommend repeated ART assessments for implementation monitoring and tailoring. Proactively assessing the implementation context throughout the intervention lifecycle allows evolving implementation barriers and facilitators to be identified (Waltz et al., 2019).

The main focus of this article is the use of the ART. We describe the use of the tool in conjunction with the Readiness Diagnostic Scale and the PDSA approach. In doing so, this article gives primary attention to the tailoring step of determinant identification and touches only lightly on the steps of determinant prioritization, determinant-strategy matching, and strategy execution. While beyond the scope of this article, the Readiness Building System offers a more comprehensive readiness-building and tailoring approach (see Fig. 3; Watson et al., 2022). Additionally, numerous determinant prioritization and strategy selection resources are available (e.g., Fernandez et al., 2022; Powell et al., 2017; Waltz et al., 2015, 2019).

Fig. 3 Readiness Building System. Note: The Readiness Building System begins with an intentional Initial Engagement stage, in which the innovation, its goals, and the readiness building process are discussed and mutually determined. Next is the Assessment stage, in which readiness components are assessed at the general and discrete levels. The Assessment stage is followed by a review of assessment trends and collaborative discussions involving key organizational stakeholders to prioritize needs and areas for improvement. Organizational stakeholders draw on the Change Management of Readiness (CMOR) approach to identify strategies for addressing prioritized readiness deficiencies. Implementation of CMOR strategies is actively monitored, with target outcomes serving as benchmark indicators of effectiveness.

Lastly, the ART is grounded in the R = MC² readiness heuristic (Scaccia et al., 2015) and draws on a psychometrically validated instrument (the Readiness Diagnostic Scale). However, development of the ART was informed by stakeholders and guided by pragmatic considerations rather than by the conventional psychometric paradigm. As the ART evolves, it will be useful to examine how individual items perform, including whether particular items are stronger predictors of readiness across activities. Further, emerging literature is shedding light on deep structural factors that influence implementation (e.g., stakeholder mental models, implementation climate, relationships) and suggests that tailoring strategies should give greater attention to intangible aspects of implementation (Metz et al., 2023). The ART assesses some deep structural and intangible factors, including implementation climate, interorganizational and intra-organizational relationships, and perceived relative advantage. Embedding more precise measures of stakeholders’ mental models and values/principles could strengthen the ART and is a direction for future work.

Conclusion

The ART is a readiness-focused, pragmatic instrument for activity-level tailoring that enables practitioners to examine discrete determinants of practice. Informed by key criteria for pragmatic measures (Glasgow & Riley, 2013; Stanick et al., 2019), the tool responds to the growing demand for practitioner-friendly implementation science strategies and methods and is thus a step toward bridging the widening gap between implementation science research and the practical needs of real-world settings. The brevity of the instrument minimizes response burden, and its activity-level focus offers advantages at the assessment stage of tailoring that existing tailoring instruments do not. In addition, the ART can serve as a companion to an array of existing evidence-based tailoring methods and tools.

Data availability

N/A.

References

Albert, N. M., Chipps, E., Falkenberg Olson, A. C., Hand, L. L., Harmon, M., Heitschmidt, M. G., Klein, C. J., Lefaiver, C., & Wood, T. (2019). Fostering academic-clinical research partnerships. The Journal of Nursing Administration, 49(5), 234–241. https://doi.org/10.1097/NNA.0000000000000744

Baker, R., Camosso-Stefinovic, J., Gillies, C., Shaw, E. J., Cheater, F., Flottorp, S., Robertson, N., Wensing, M., Fiander, M., Eccles, M. P., Godycki-Cwirko, M., van Lieshout, J., & Jäger, C. (2015). Tailored interventions to address determinants of practice. The Cochrane Database of Systematic Reviews, 2015(4), CD005470. https://doi.org/10.1002/14651858.CD005470.pub3

Baldwin, L.-M., Tuzzio, L., Cole, A. M., Holden, E., Powell, J. A., & Parchman, M. L. (2022). Tailoring implementation strategies for cardiovascular disease risk calculator adoption in primary care clinics. The Journal of the American Board of Family Medicine, 35(6), 1143–1155. https://doi.org/10.3122/jabfm.2022.210449R1

Beidas, R. S., Dorsey, S., Lewis, C. C., Lyon, A. R., Powell, B. J., Purtle, J., Saldana, L., Shelton, R. C., Stirman, S. W., & Lane-Fall, M. B. (2022). Promises and pitfalls in implementation science from the perspective of US-based researchers: Learning from a pre-mortem. Implementation Science, 17(1), 55. https://doi.org/10.1186/s13012-022-01226-3

Boaz, A., Hanney, S., Borst, R., O’Shea, A., & Kok, M. (2018). How to engage stakeholders in research: Design principles to support improvement. Health Research Policy and Systems, 16(1), 60. https://doi.org/10.1186/s12961-018-0337-6

Calancie, L., Fullerton, K., Appel, J. M., Korn, A. R., Hennessy, E., Hovmand, P., & Economos, C. D. (2022). Implementing group model building with the shape up under 5 community committee working to prevent early childhood obesity in Somerville, Massachusetts. Journal of Public Health Management and Practice, 28(1), E43. https://doi.org/10.1097/PHH.0000000000001213

Craig, P., Dieppe, P., Macintyre, S., Michie, S., Nazareth, I., & Petticrew, M. (2008). Developing and evaluating complex interventions: The new Medical Research Council guidance. BMJ, 337, a1655. https://doi.org/10.1136/bmj.a1655

Domlyn, A. M., Scott, V., Livet, M., Lamont, A., Watson, A., Kenworthy, T., Talford, M., Yannayon, M., & Wandersman, A. (2021). R = MC² readiness building process: A practical approach to support implementation in local, state, and national settings. Journal of Community Psychology, 49(5), 1228–1248. https://doi.org/10.1002/jcop.22531

Drzensky, F., Egold, N., & van Dick, R. (2012). Ready for a change? A longitudinal study of antecedents, consequences and contingencies of readiness for change. Journal of Change Management, 12(1), 95–111. https://doi.org/10.1080/14697017.2011.652377

Durlak, J. A., & DuPre, E. P. (2008). Implementation matters: A review of research on the influence of implementation on program outcomes and the factors affecting implementation. American Journal of Community Psychology, 41(3–4), 327–350. https://doi.org/10.1007/s10464-008-9165-0

Engell, T., Stadnick, N. A., Aarons, G. A., & Barnett, M. L. (2023). Common elements approaches to implementation research and practice: Methods and integration with intervention science. Global Implementation Research and Applications, 3(1), 1–15. https://doi.org/10.1007/s43477-023-00077-4

Farley, K., Thompson, C., Hanbury, A., & Chambers, D. (2013). Exploring the feasibility of Conjoint Analysis as a tool for prioritizing innovations for implementation. Implementation Science, 8(1), 56. https://doi.org/10.1186/1748-5908-8-56

Fernandez, M. E., Damschroder, L., & Balasubramanian, B. (2022). Understanding barriers and facilitators for implementation across settings. In B. J. Weiner, K. Sherr, & C. C. Lewis (Eds.), Practical implementation science: Moving evidence into action (pp. 97–132). Springer Publishing Company.

Fernandez, M. E., ten Hoor, G. A., van Lieshout, S., Rodriguez, S. A., Beidas, R. S., Parcel, G., Ruiter, R. A. C., Markham, C. M., & Kok, G. (2019). Implementation mapping: Using intervention mapping to develop implementation strategies. Frontiers in Public Health. https://doi.org/10.3389/fpubh.2019.00158

Glasgow, R. E., & Chambers, D. (2012). Developing robust, sustainable, implementation systems using rigorous, rapid and relevant science. Clinical and Translational Science, 5(1), 48–55. https://doi.org/10.1111/j.1752-8062.2011.00383.x

Glasgow, R. E., Phillips, S. M., & Sanchez, M. A. (2014). Implementation science approaches for integrating eHealth research into practice and policy. International Journal of Medical Informatics, 83(7), e1–e11. https://doi.org/10.1016/j.ijmedinf.2013.07.002

Glasgow, R. E., & Riley, W. T. (2013). Pragmatic measures: What they are and why we need them. American Journal of Preventive Medicine, 45(2), 237–243. https://doi.org/10.1016/j.amepre.2013.03.010

Greenhalgh, T., Robert, G., Macfarlane, F., Bate, P., & Kyriakidou, O. (2004). Diffusion of innovations in service organizations: Systematic review and recommendations. The Milbank Quarterly, 82(4), 581–629. https://doi.org/10.1111/j.0887-378X.2004.00325.x

Holt, D. T., & Vardaman, J. M. (2013). Toward a comprehensive understanding of readiness for change: The case for an expanded conceptualization. Journal of Change Management, 13(1), 9–18. https://doi.org/10.1080/14697017.2013.768426

Howell, D., Powis, M., Kirkby, R., Amernic, H., Moody, L., Bryant-Lukosius, D., O’Brien, M. A., Rask, S., & Krzyzanowska, M. (2022). Improving the quality of self-management support in ambulatory cancer care: A mixed-method study of organisational and clinician readiness, barriers and enablers for tailoring of implementation strategies to multisites. BMJ Quality & Safety, 31(1), 12–22. https://doi.org/10.1136/bmjqs-2020-012051

Kenworthy, T., Domlyn, A., Scott, V. C., Schwartz, R., & Wandersman, A. (2022). A proactive, systematic approach to building the capacity of technical assistance providers. Health Promotion Practice, 24(3), 546–559. https://doi.org/10.1177/15248399221080096

Kwok, E. Y. L., Moodie, S. T. F., Cunningham, B. J., & Oram Cardy, J. E. (2020). Selecting and tailoring implementation interventions: A concept mapping approach. BMC Health Services Research, 20(1), 385. https://doi.org/10.1186/s12913-020-05270-x

Langley, G. J., Moen, R. D., Nolan, K. M., Nolan, T. W., Norman, C. L., & Provost, L. P. (2009). The improvement guide: A practical approach to enhancing organizational performance. Wiley.

Lewis, C. C., Scott, K., & Marriott, B. R. (2018). A methodology for generating a tailored implementation blueprint: An exemplar from a youth residential setting. Implementation Science, 13(1), 68. https://doi.org/10.1186/s13012-018-0761-6

Lewis, M. E., & Myhra, L. L. (2017). Integrated care with indigenous populations: A systematic review of the literature. American Indian & Alaska Native Mental Health Research: The Journal of the National Center, 24(3), 88–110. https://doi.org/10.5820/aian.2403.2017.88

Livet, M., Yannayon, M., Richard, C., Sorge, L., & Scanlon, P. (2020). Ready, set, go!: Exploring use of a readiness process to implement pharmacy services. Implementation Science Communications, 1(1), 52. https://doi.org/10.1186/s43058-020-00036-2

Lyon, A. R., Comtois, K. A., Kerns, S. E. U., Landes, S. J., & Lewis, C. C. (2020). Closing the science–practice gap in implementation before it widens. In B. Albers, A. Shlonsky, & R. Mildon (Eds.), Implementation Science 3.0 (pp. 295–313). Springer International Publishing. https://doi.org/10.1007/978-3-030-03874-8_12

McHugh, S. M., Riordan, F., Curran, G. M., Lewis, C. C., Wolfenden, L., Presseau, J., Lengnick-Hall, R., & Powell, B. J. (2022). Conceptual tensions and practical trade-offs in tailoring implementation interventions. Frontiers in Health Services. https://doi.org/10.3389/frhs.2022.974095

Metz, A., Kainz, K., & Boaz, A. (2023). Intervening for sustainable change: Tailoring strategies to align with values and principles of communities. Frontiers in Health Services. https://doi.org/10.3389/frhs.2022.959386

Miake-Lye, I. M., Delevan, D. M., Ganz, D. A., Mittman, B. S., & Finley, E. P. (2020). Unpacking organizational readiness for change: An updated systematic review and content analysis of assessments. BMC Health Services Research, 20(1), 106. https://doi.org/10.1186/s12913-020-4926-z

Movsisyan, A., Arnold, L., Evans, R., Hallingberg, B., Moore, G., O’Cathain, A., Pfadenhauer, L. M., Segrott, J., & Rehfuess, E. (2019). Adapting evidence-informed complex population health interventions for new contexts: A systematic review of guidance. Implementation Science, 14(1), 105. https://doi.org/10.1186/s13012-019-0956-5

Powell, B. J., Beidas, R. S., Lewis, C. C., Aarons, G. A., McMillen, J. C., Proctor, E. K., & Mandell, D. S. (2017). Methods to improve the selection and tailoring of implementation strategies. The Journal of Behavioral Health Services & Research, 44(2), 177–194. https://doi.org/10.1007/s11414-015-9475-6

Raine, R., Fitzpatrick, R., Barratt, H., Bevan, G., Black, N., Boaden, R., Bower, P., Campbell, M., Denis, J.-L., Devers, K., Dixon-Woods, M., Fallowfield, L., Forder, J., Foy, R., Freemantle, N., Fulop, N. J., Gibbons, E., Gillies, C., Goulding, L., & Zwarenstein, M. (2016). Challenges, solutions and future directions in the evaluation of service innovations in health care and public health. Health Services and Delivery Research, 4(16), 1–136.

Robinson, C. H., & Damschroder, L. J. (2023). A pragmatic context assessment tool (pCAT): Using a think aloud method to develop an assessment of contextual barriers to change. Implementation Science Communications, 4(1), 3. https://doi.org/10.1186/s43058-022-00380-5

Rogers, P. J. (2008). Using programme theory to evaluate complicated and complex aspects of interventions. Evaluation, 14(1), 29–48. https://doi.org/10.1177/1356389007084674

Rycroft-Malone, J., Wilkinson, J. E., Burton, C. R., Andrews, G., Ariss, S., Baker, R., Dopson, S., Graham, I., Harvey, G., Martin, G., McCormack, B. G., Staniszewska, S., & Thompson, C. (2011). Implementing health research through academic and clinical partnerships: A realistic evaluation of the Collaborations for Leadership in Applied Health Research and Care (CLAHRC). Implementation Science, 6(1), 74. https://doi.org/10.1186/1748-5908-6-74

Scaccia, J. P., Cook, B. S., Lamont, A., Wandersman, A., Castellow, J., Katz, J., & Beidas, R. S. (2015). A practical implementation science heuristic for organizational readiness: R = MC². Journal of Community Psychology, 43(4), 484–501. https://doi.org/10.1002/jcop.21698

Scott, V. C., Alia, K., Scaccia, J., Ramaswamy, R., Saha, S., Leviton, L., & Wandersman, A. (2019). Formative evaluation and complex health improvement initiatives: A learning system to improve theory, implementation, support, and evaluation. American Journal of Evaluation, 41(1), 89–106. https://doi.org/10.1177/1098214019868022

Scott, V. C., Gold, S. B., Kenworthy, T., Snapper, L., Gilchrist, E. C., Kirchner, S., & Wong, S. L. (2021). Assessing cross-sector stakeholder readiness to advance and sustain statewide behavioral integration beyond a State Innovation Model (SIM) initiative. Translational Behavioral Medicine, 11(7), 1420–1429. https://doi.org/10.1093/tbm/ibab022

Scott, V. C., Kenworthy, T., Godly-Reynolds, E., Bastien, G., Scaccia, J., McMickens, C., Rachel, S., Cooper, S., Wrenn, G., & Wandersman, A. (2017). The Readiness for Integrated Care Questionnaire (RICQ): An instrument to assess readiness to integrate behavioral health and primary care. American Journal of Orthopsychiatry, 87(5), 520–530. https://doi.org/10.1037/ort0000270

Shrestha, R., Karki, P., Altice, F. L., Dubov, O., Fraenkel, L., Huedo-Medina, T., & Copenhaver, M. (2018). Measuring acceptability and preferences for implementation of Pre-Exposure Prophylaxis (PrEP) using Conjoint Analysis: An application to primary HIV prevention among high risk drug users. AIDS and Behavior, 22(4), 1228–1238. https://doi.org/10.1007/s10461-017-1851-1

Stanick, C. F., Halko, H. M., Nolen, E. A., Powell, B. J., Dorsey, C. N., Mettert, K. D., Weiner, B. J., Barwick, M., Wolfenden, L., Damschroder, L. J., & Lewis, C. C. (2019). Pragmatic measures for implementation research: Development of the Psychometric and Pragmatic Evidence Rating Scale (PAPERS). Translational Behavioral Medicine, 11(1), 11–20. https://doi.org/10.1093/tbm/ibz164

Swierad, E., Huang, T.T.-K., Ballard, E., Flórez, K., & Li, S. (2020). Developing a socioculturally nuanced systems model of childhood obesity in Manhattan’s Chinese American community via group model building. Journal of Obesity, 2020, e4819143. https://doi.org/10.1155/2020/4819143

Valenta, S., Ribaut, J., Leppla, L., Mielke, J., Teynor, A., Koehly, K., Gerull, S., Grossmann, F., Witzig-Brändli, V., De Geest, S., on behalf of the SMILe study team. (2023). Context-specific adaptation of an eHealth-facilitated, integrated care model and tailoring its implementation strategies—A mixed-methods study as a part of the SMILe implementation science project. Frontiers in Health Services. https://doi.org/10.3389/frhs.2022.977564

Waltz, T. J., Powell, B. J., Fernández, M. E., Abadie, B., & Damschroder, L. J. (2019). Choosing implementation strategies to address contextual barriers: Diversity in recommendations and future directions. Implementation Science, 14(1), 42. https://doi.org/10.1186/s13012-019-0892-4

Waltz, T. J., Powell, B. J., Matthieu, M. M., Damschroder, L. J., Chinman, M. J., Smith, J. L., Proctor, E. K., & Kirchner, J. E. (2015). Use of concept mapping to characterize relationships among implementation strategies and assess their feasibility and importance: Results from the Expert Recommendations for Implementing Change (ERIC) study. Implementation Science, 10(1), 109. https://doi.org/10.1186/s13012-015-0295-0

Watson, A. K., Hernandez, B. F., Kolodny-Goetz, J., Walker, T. J., Lamont, A., Imm, P., Wandersman, A., & Fernandez, M. E. (2022). Using implementation mapping to build organizational readiness. Frontiers in Public Health. https://doi.org/10.3389/fpubh.2022.904652

Wichmann, F., Braun, M., Ganz, T., Lubasch, J., Heidenreich, T., Laging, M., & Pischke, C. R. (2020). Assessment of campus community readiness for tailoring implementation of evidence-based online programs to prevent risky substance use among university students in Germany. Translational Behavioral Medicine, 10(1), 114–122. https://doi.org/10.1093/tbm/ibz060

Funding

Robert Wood Johnson Foundation, 35497, Wandersman Center, 74609, Kaiser Permanente, 20151254.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Scott, V.C., LaMarca, T.K., Hamm, D. et al. The ART of Readiness: A Practical Tool for Implementation Tailoring at the Activity Level. Glob Implement Res Appl 4, 139–150 (2024). https://doi.org/10.1007/s43477-023-00115-1

DOI: https://doi.org/10.1007/s43477-023-00115-1