Image Credit: “Mixture of Volumetric Primitives for Efficient Neural Rendering” © 2021 Facebook Reality Labs

SIGGRAPH sat down with Stephen Lombardi, research scientist at Facebook Reality Labs, to talk about his team’s SIGGRAPH 2021 Technical Paper “Mixture of Volumetric Primitives for Efficient Neural Rendering.” The paper is a follow-up to the team’s SIGGRAPH 2019 research on neural volumes. The core idea of the 2021 research, “volumetric primitives,” is to model a scene using a collection of smaller, moving voxel grids rather than the single large 3D voxel grid used in the neural volumes research. Read on to hear how Stephen’s team used their 2021 research to solve a 2019 problem.

SIGGRAPH: Tell us about the process of developing “Mixture of Volumetric Primitives for Efficient Neural Rendering”. What inspired your team to pursue this research topic?

Stephen Lombardi (SL): This paper is largely a follow-up to our paper “Neural Volumes: Learning Dynamic Renderable Volumes from Images” from SIGGRAPH 2019. In Neural Volumes, we proposed a method for reconstructing and rendering moving objects from novel views in real time given only multi-view image data. This is a really exciting research area because it will enable compelling, interactive content in virtual and augmented reality.

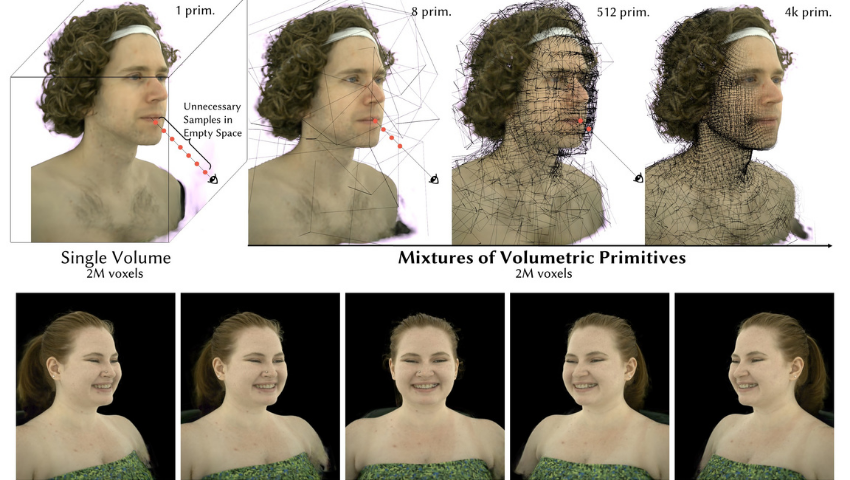

The main idea behind “Neural Volumes” was to model scenes with a volumetric representation consisting of an RGB color and opacity value for each point in space. For that particular paper, we explored a voxel-based volumetric representation. Voxel-based methods have a number of advantages. First, we can generate voxel grids in real time using 3D convolutions, allowing us to model dynamic scenes. Second, we can sample the color and opacity values of the volume very quickly using trilinear interpolation. These advantages allow “Neural Volumes” models to be rendered in real time. However, “Neural Volumes” distributes voxels uniformly across the 3D extent of the scene, which makes it difficult to model objects at high resolution.
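To make the sampling step concrete, here is a minimal sketch of trilinear interpolation over a voxel grid that stores RGB color and opacity per voxel. It is an illustration only, not the paper's implementation: the function name `trilinear_sample`, the nested-list grid layout, and the choice to clamp coordinates at the grid boundary are all assumptions made for this example.

```python
def trilinear_sample(grid, x, y, z):
    """Sample a voxel grid at continuous coordinates (x, y, z).

    grid[i][j][k] is a tuple of channels (for example r, g, b, opacity).
    Coordinates are in voxel units, so (0, 0, 0) is the first voxel.
    Both the layout and the boundary clamping are assumptions of this sketch.
    """
    nx, ny, nz = len(grid), len(grid[0]), len(grid[0][0])

    def cell(t, n):
        # Clamp to the grid, then pick the two neighboring indices and the
        # fractional position between them.
        t = min(max(t, 0.0), n - 1.0)
        lo = min(int(t), max(n - 2, 0))
        hi = min(lo + 1, n - 1)
        return lo, hi, t - lo

    x0, x1, tx = cell(x, nx)
    y0, y1, ty = cell(y, ny)
    z0, z1, tz = cell(z, nz)

    channels = len(grid[0][0][0])
    out = [0.0] * channels
    # Blend the eight surrounding voxels, each weighted by the product of
    # its per-axis linear weights.
    for i, wx in ((x0, 1.0 - tx), (x1, tx)):
        for j, wy in ((y0, 1.0 - ty), (y1, ty)):
            for k, wz in ((z0, 1.0 - tz), (z1, tz)):
                w = wx * wy * wz
                for c in range(channels):
                    out[c] += w * grid[i][j][k][c]
    return tuple(out)
```

Because each lookup touches only eight neighboring voxels, the cost per sample is constant regardless of grid size, which is one reason this kind of sampling suits real-time rendering.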

To address this, we came up with “Mixture of Volumetric Primitives”. Rather than modeling the scene with one large 3D voxel grid, we model it with a collection of smaller, moving voxel grids that we call volumetric primitives. By allowing the model to better control the density of voxels in different parts of the scene and model the motion of the scene with the motion of the primitives, “Mixture of Volumetric Primitives” is able to model dynamic scenes with higher resolution and at a faster framerate than “Neural Volumes”.
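One way to picture a collection of small, moving voxel grids is the sketch below, which looks up color and opacity at a world-space point by transforming it into each primitive's local frame. Everything specific here is an assumption for illustration: the dictionary layout (`center`, `rotation`, `half_size`, `grid`), the nearest-voxel lookup (used instead of trilinear interpolation to keep the example short), and the opacity-weighted rule for combining overlapping primitives. None of it is taken from the paper's implementation.

```python
def world_to_local(point, center, rotation, half_size):
    """Map a world-space point into a primitive's local cube [-1, 1]^3.

    rotation is a 3x3 matrix (list of rows) whose columns are the
    primitive's axes in world space, so its transpose maps world offsets
    onto the local axes.
    """
    d = [point[a] - center[a] for a in range(3)]
    return [sum(rotation[r][c] * d[r] for r in range(3)) / half_size
            for c in range(3)]


def sample_primitive(prim, point):
    """Return (r, g, b, opacity) at point, or None if the point is outside."""
    local = world_to_local(point, prim["center"], prim["rotation"],
                           prim["half_size"])
    if any(abs(u) > 1.0 for u in local):
        return None
    grid = prim["grid"]  # assumed cubic: n x n x n voxels
    n = len(grid)
    # Nearest-voxel lookup: a simplification made for this sketch.
    idx = [min(int((u + 1.0) / 2.0 * n), n - 1) for u in local]
    return grid[idx[0]][idx[1]][idx[2]]


def sample_mixture(prims, point):
    """Combine every primitive that covers point.

    Opacity-weighted color averaging with summed, clamped opacity is an
    assumed combination rule chosen for illustration.
    """
    rgb, alpha = [0.0, 0.0, 0.0], 0.0
    for prim in prims:
        s = sample_primitive(prim, point)
        if s is None:
            continue
        for c in range(3):
            rgb[c] += s[3] * s[c]
        alpha += s[3]
    if alpha == 0.0:
        return (0.0, 0.0, 0.0, 0.0)
    return (rgb[0] / alpha, rgb[1] / alpha, rgb[2] / alpha, min(alpha, 1.0))
```

Moving a primitive in this sketch only means changing its `center` and `rotation`; the voxel contents travel with it, and empty space between primitives costs no storage.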

SIGGRAPH: Let’s get technical. How did you create the collection of moving volumetric primitives? How many people were involved? How long did it take? What was the biggest challenge? Why was real-time a critical element in the process?

SL: There are two main parts to creating the collection of moving volumetric primitives: initialization of the primitives, and the learning framework to train the system from multi-view video data.

For initialization, we use classical face modeling techniques (e.g., keypoint detection, 3D reconstruction, blendshape tracking) to produce a dynamic triangle mesh of a person’s face. To initialize the primitives, we simply place them across the surface of the triangle mesh, evenly distributed in the UV space of the face mesh. This initialization is important for getting really high-quality results, because without a good starting point the learning framework may get stuck in a local minimum during training. By initializing the primitives to be evenly distributed across the surface of the face, we ensure that all the primitives are used and the resolution of the model is roughly similar across the face.
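The even distribution in UV space can be sketched as a regular grid of UV coordinates, each mapped to a 3D position on the mesh surface. This is a simplified illustration under stated assumptions: `uv_grid_centers` and `init_primitive_centers` are hypothetical names, and `surface_point` stands in for whatever lookup converts a UV coordinate to a point on the tracked face mesh, which the interview does not describe.

```python
def uv_grid_centers(side):
    """Centers of a side x side grid of cells covering UV space [0, 1]^2."""
    return [((i + 0.5) / side, (j + 0.5) / side)
            for j in range(side)
            for i in range(side)]


def init_primitive_centers(side, surface_point):
    """Place one primitive per UV cell.

    surface_point(u, v) is a hypothetical callable returning the (x, y, z)
    position of the mesh surface at the given UV coordinate.
    """
    return [surface_point(u, v) for (u, v) in uv_grid_centers(side)]


def flat_surface(u, v):
    # Toy stand-in for a face mesh: a flat unit square in the z = 0 plane.
    return (u, v, 0.0)


centers = init_primitive_centers(2, flat_surface)
```

With a real tracked mesh, the same UV grid would be reused on every frame, so each primitive follows the patch of surface it was assigned to as the face moves.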

Although our initialization gets us a good initial placement for many of the volumetric primitives (especially those on the face), it is often wrong for poorly-tracked areas like the hair and shoulders. To address this, we train the model to produce the primitive locations, orientations, and content (i.e., the RGB color and opacity for the primitive voxel grids) to best match the images we captured from our multi-view capture system. This training process allows us to produce really high-quality renderings of people from arbitrary viewpoints.

As you can imagine, there are a large number of people involved given all these steps. There’s a large team managing the capture system hardware and software, teams managing the data capture process, and teams managing storage and pre-processing of the data (e.g., developing and running the classical face tracking algorithms) in addition to our research team that developed the algorithms. This paper is really the result of years of hard work from our lab in Pittsburgh, Pennsylvania led by Yaser Sheikh.

The biggest challenge was the (often uncertain) research directions we decided to explore that eventually led to this method. Although learnable volumetric modeling and rendering techniques have become popular in the past few years, at the time of “Neural Volumes” it was unknown how successful these approaches would be. Even now, we’re trying to make the real-time performance of “Mixture of Volumetric Primitives” competitive with more traditional representations like triangle meshes, but that is difficult given how much more complex it is than those models.

The reason real-time is so important for us is that the mission of our group is to create lifelike avatars in VR and eventually AR that enable you to feel that you are together in the same room as someone else and that you are able to communicate your ideas and emotions effortlessly, not just through speech but also through your facial expressions, body gestures, tone of voice, and so on. Today’s videoconferencing technologies fall short on that promise, as became clear during the pandemic. In line with Meta’s mission, our goal is to make social interactions in AR/VR feel as natural as in-person interactions, and allow people who are geographically far apart to feel physically present with one another.

SIGGRAPH: What do you find most exciting about the final product you presented to the SIGGRAPH 2021 community?

SL: The most exciting part for us right now is the quality of the results and potential for improving the model in the future. In particular, looking at the “Mixture of Volumetric Primitives” avatars in VR is a very compelling experience!

SIGGRAPH: What is next for “Mixture of Volumetric Primitives for Efficient Neural Rendering”? How do you envision this rendering process will be used in the future?

SL: There are a number of aspects that we’d like to improve on, including the rendering quality and speed. This will allow us to run the model in real time on embedded devices, for example. We also plan to extend this model to enable relighting and to move from only face/upper body rendering to full body rendering. We definitely envision using this model for social telepresence, but we’re also excited to see how the community will extend this work, perhaps by improving how the volumetric primitives fit the data or by extending it to other types of scenes.

SIGGRAPH: If you’ve attended a SIGGRAPH conference in the past, share your favorite SIGGRAPH memory.

SL: My favorite SIGGRAPH memory was during my first SIGGRAPH, in 2018, when I presented one of our first papers toward creating a social telepresence system. This was an exciting SIGGRAPH for me because it was my first, I was presenting a talk, and it was also my first time in Vancouver.

SIGGRAPH: Technical Papers submissions just opened for SIGGRAPH 2022. What advice do you have for someone looking to submit to the program for next year’s conference?

SL: Start early and if your paper is accepted, make a funny fast forward video!

SIGGRAPH 2022 Technical Papers submissions are open. This year, there are two ways to submit. Learn more about the program and submit your research today!

Stephen Lombardi is a research scientist at Reality Labs, Meta in Pittsburgh. He received his Bachelor’s in computer science from The College of New Jersey in 2009, and his Ph.D. in computer science, advised by Ko Nishino, from Drexel University in 2016. His doctoral work aimed to infer physical properties, like the reflectance of objects and the illumination of scenes, from small sets of photographs. At Reality Labs, he develops realistic, driveable models of human faces by combining deep generative modeling techniques with 3D morphable models. His current research interests are the unsupervised learning of volumetric representations of objects, scenes, and people.