“Sense of Embodiment Inducement for People With Reduced Lower-body Mobility and Sensations With Partial-visuomotor Stimulation” © 2022 Korea Advanced Institute of Science and Technology (KAIST)

A team from the Korea Advanced Institute of Science and Technology (KAIST) demonstrates an upper-body motion tracking-based partial-visuomotor technique to induce a sense of embodiment (SoE) in people with reduced lower-body mobility and sensation (PRLMS). Read about this innovative project below, and see more at SIGGRAPH 2022’s Emerging Technologies session on Wednesday, 10 August.

SIGGRAPH: Tell us about the process of developing “Sense of Embodiment Inducement for People With Reduced Lower-body Mobility and Sensations With Partial-visuomotor Stimulation.” What inspired your team to pursue this technology?

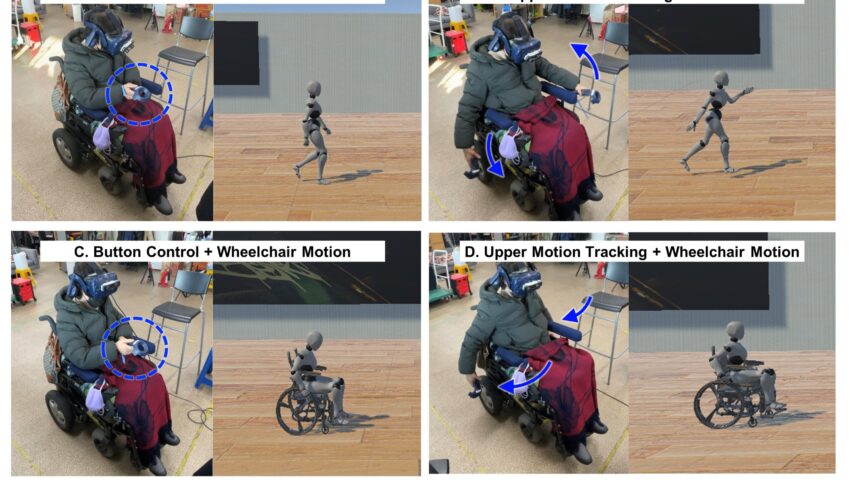

Hyuckjin Jang (HJ): I participated in a project that implemented an augmented reality (AR)-based sign language translation system for deaf and hard-of-hearing (DHH) people. Since then, I have been interested in extended reality (XR) interfaces for people with special needs, and mainly in how avatars and users interact in virtual reality (VR). Taehei was focused specifically on generating and representing human motion within VR space layouts using VR devices. During our conversation, we realized that the most common way of inducing the sense of embodiment in virtual reality is to use synchronous multisensory feedback, such as visuomotor and visuotactile stimulation. We also discovered that some work generates lower-body motion only, using partial inputs such as button control or arm swing. Given this, we asked how people who are unable to move parts of their bodies could feel a sense of embodiment. We wondered: since many avatar interactions in virtual reality content focus on the standing body of able-bodied people, would the same mechanism work for people with disabilities, especially PRLMS people?

SIGGRAPH: What was the biggest challenge you faced in its development?

Taehei Kim (TK): The biggest challenge we faced was conveying a positive impression of the proposed method to our study participants. Since neither of us has reduced lower-body mobility and sensation, we were concerned that our proposed method might hurt the feelings of people with a partial disability. Walking might seem necessary to non-PRLMS people, but that might not be the case for PRLMS people. Observing their virtual selves walk when they cannot walk in real life might cause a sense of deprivation. Moreover, recruiting participants was difficult. Because our experiment was carried out in person, PRLMS people with mobility issues had to put in much more effort to come to the lab, and the COVID-19 situation made it more challenging for them to do so. We received help from the local hospital and the Daejeon Association of Persons with Physical Disabilities. Thankfully, many of our participants showed satisfaction and were willing to share their opinions about our work.

SIGGRAPH: How does “Sense of Embodiment” contribute to the greater accessibility and adaptability of conversation? How does it advance adaptive technology?

TK, HJ, Sang Ho Yoon (SHY): We hope the larger community focuses on the power of virtual reality that enables us to achieve things that might not be possible in the real environment, not only for non-PRLMS people but even more so for PRLMS people. VR can provide an experience that PRLMS people have difficulty achieving in the real environment, such as walking.

Avatar embodiment is a crucial component of the immersive VR experience, since the avatar connects the user with the virtual environment. The problem, as we mentioned earlier, is that recent methods have mainly focused on able-bodied people, which might prevent PRLMS people from having a more immersive VR experience. Therefore, more inclusive approaches to inducing avatar embodiment are needed.

In this sense, we believe our work can offer insight into the difference in body movement between PRLMS and non-PRLMS people. For example, we initially thought that the upper-body motion range of PRLMS people would not be much different from that of non-PRLMS people. However, through the studies, we realized that partial disability differs from person to person, which requires precise adjustment for each person.

SIGGRAPH: What do you find most exciting about the final product that you will present at SIGGRAPH 2022?

TK, HJ, SHY: We anticipate that our system will connect us with various people interested in developing technology for people with special needs. The majority of disabilities are acquired through sudden accidents or illness. Therefore, we believe inclusive technology is not a minor issue but an issue for all. Technology for greater accessibility needs collaboration between people from diverse fields. We see our work as only one example.

We look forward to giving audiences a moment to think about accessibility and the need for a more inclusive and creative VR system with a relatively simple setup.

SIGGRAPH: What’s next for “Sense of Embodiment”?

TK, HJ, SHY: We seek to develop different lower-body activities for PRLMS people. During the experiments, we asked participants what kind of activity they want to do in VR. Many participants missed traveling freely to different locations, as they could when their lower-body movement was not restricted. So, we are looking into activities such as climbing or swimming for PRLMS people. We would also like to learn more about how PRLMS people react to dynamic and out-of-the-ordinary body motions used by superheroes, such as Spider-Man jumping over skyscrapers.

SIGGRAPH: What advice do you have for someone planning to submit to Emerging Technologies at a future SIGGRAPH conference?

TK, HJ, SHY: Participating in Emerging Technologies is an excellent opportunity to confidently display your interests. It is most attractive because you can meet potential users of your technology in one place. We encourage all to submit their work and share their voices!

The latest innovations are at SIGGRAPH 2022! Register now to join us in person in Vancouver, 8-11 August, or online starting 25 July.

Taehei Kim is a second-year Ph.D. candidate in the Department of Culture Technology, Korea Advanced Institute of Science and Technology (KAIST) in South Korea and joint first author of this work. Her interest lies in natural human motion generation and representation in virtual reality. She focuses on the adaptive avatar’s motion in diverse VR spaces.

Hyuckjin Jang is an M.S. student in the Department of Culture Technology, KAIST in South Korea and joint first author of this work. He is interested in how avatar interaction and virtual environments could affect users’ cognition and emotion in reality. He seeks to quantify the user’s cognitive reaction in virtual reality using physiological data such as EEG and eye-tracking and derive meaningful insight. Based on that, he dreams of developing inclusive technologies to assist those with special needs through virtual reality.

Sang Ho Yoon is an assistant professor in the Graduate School of Culture Technology at KAIST. His current research focuses on human-computer interaction (HCI) including physical computing, natural user interface, and socially acceptable interactions. Sang Ho Yoon received his Ph.D. in mechanical engineering from Purdue University in 2017 and a B.S. and M.S. in mechanical engineering from Carnegie Mellon University in 2008. Before KAIST, he spent time at Samsung Research as a principal engineer and at Microsoft as a research engineer working on novel sensing and haptic systems for future interfaces and devices.
