“GAN-based AI Drawing Board for Image Generation and Colorization” © 2020 PAII Inc., Ping An Technology

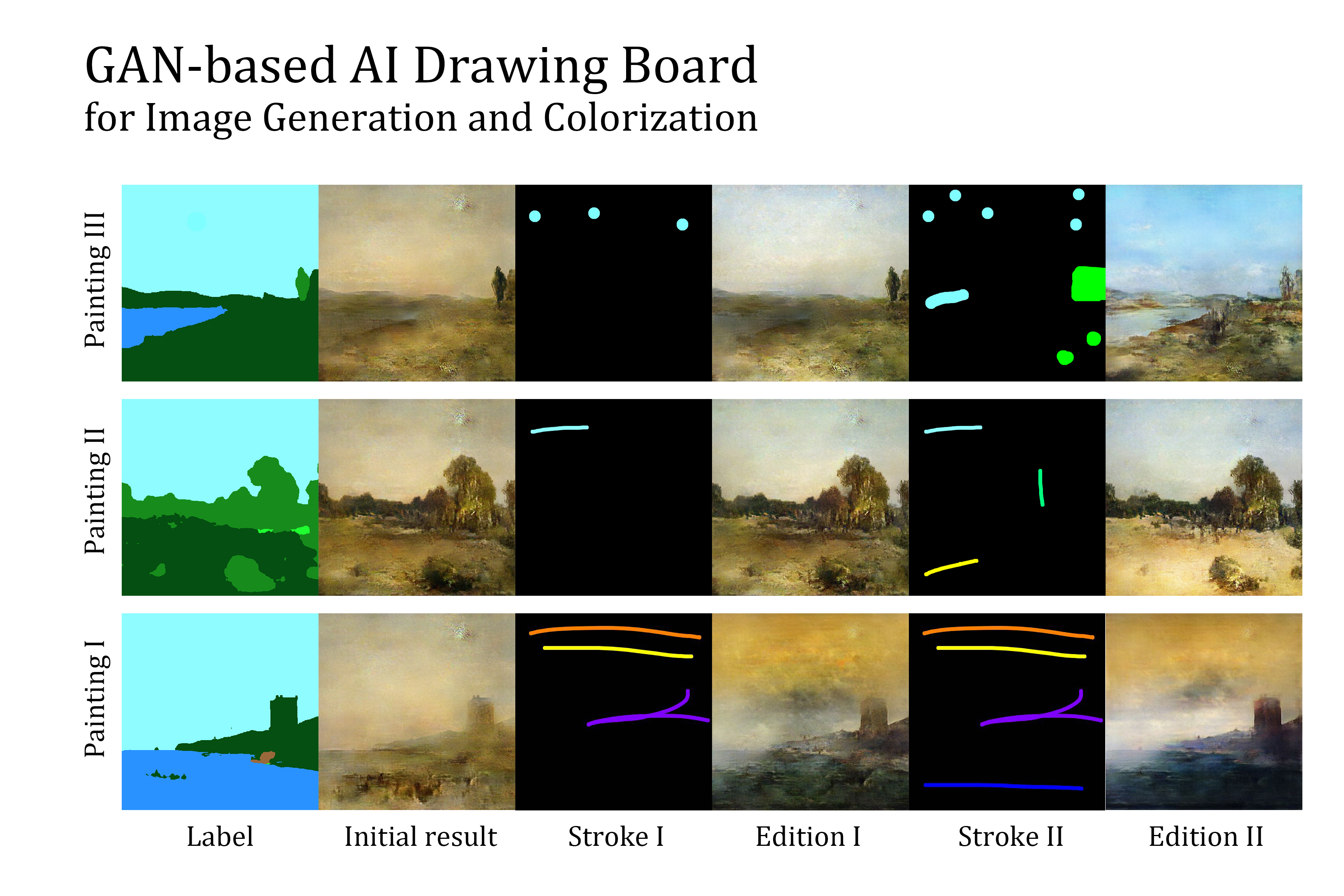

The virtual SIGGRAPH 2020 conference is fast approaching and that means a chance to explore, discover, and hear from top computer graphics researchers over the next two weeks (17–28 August) and — because it’s virtual — revisit novel, interesting ideas at your leisure until the conference signs off on 27 October. Part of a collection of research in the SIGGRAPH 2020 Posters program on Image Processing & Computer Vision, “GAN-based AI Drawing Board for Image Generation and Colorization” incorporates colorization into generation via a novel and lightweight feature embedding method, enabling the creation of pictures or paintings with semantics and color control in real time. We sat down with one of the lead researchers on the project, Minghao Li of PAII Inc., to learn more about the team’s methods.

SIGGRAPH: Talk a bit about the process for developing the research for your poster, “GAN-based AI Drawing Board for Image Generation and Colorization”. What inspired the focus/topic?

Minghao Li (ML): As the AI-painting group at PAII Inc., we research cutting-edge generation approaches and strive to narrow the gap between artificial intelligence and the art world by delivering our technology to the fine arts and involving more people in the arts.

[To develop our research,] we asked art-related questions of professionals, either artists who own galleries or professors who lead students in starting their journeys: How do you think AI could help the arts? What do you think might be the difficulties in your creation or teaching process that stop people from getting closer to the arts? Surprisingly, the professionals shared one common conclusion: If AI could somehow free people from the redundant drawing process so they can focus on their thoughts, and later refine the details of their best choice, AI would make art easier and more hands-on for everybody.

We summarize this as INTERACTION and REPLACEMENT of pure drawing with AI. And, thanks to our GAN (Generative Adversarial Network) community, many researchers have published fantastic papers on generation. In particular, Taesung Park et al. showed a great demo that helps people generate images from simple semantic inputs. With that overall purpose, our thoughts flowed from there. We wanted to achieve a fast and intuitive approach to help people generate and edit paintings in both the semantic and color dimensions, much like the art creation process!

SIGGRAPH: Let’s get technical. How did you develop the GAN-based technique?

ML: We have five people involved in the project (me, Yuchuan Gou, Bo Gong, Jing Xiao, and Mei Han), and it is thanks to the effort of everyone in this group that we were able to overcome the challenges and achieve our final results. We also thank Larry Lai and Jinghong Miao for their help with the paperwork.

One big challenge along the journey was effective colorization. We wanted to incorporate colorization into the generation process without affecting model size or generation time. We first attempted to feed the stroke/color inputs into the encoder of the generator, but this failed: because the colors occupy only a small portion of the whole image, the input was sparse, with most values equal to 0, and gave control over only a few color regions. Credit is given to YOLO, as it inspired us to use a feature representation approach to convert the color inputs into a dense matrix. From there, we brought in the semantic inputs so that both control the paintings together.
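To make the sparsity problem concrete, here is a minimal sketch of one way sparse color strokes could be pooled into a compact grid of features. It is only an illustration under stated assumptions, not the team's actual embedding: the function name `embed_color_strokes`, the grid size, and the choice of features (mean stroke color plus coverage per cell) are all hypothetical.

```python
import numpy as np

def embed_color_strokes(stroke_rgb, stroke_mask, grid=8):
    """Pool sparse color strokes into a (grid, grid, 4) feature matrix.

    Hypothetical illustration, not the method from the poster.
    stroke_rgb:  (H, W, 3) float array with colors in [0, 1]
    stroke_mask: (H, W) bool array, True where the user drew a stroke
    Each cell stores the mean stroke color and the fraction of the cell covered.
    """
    h, w = stroke_mask.shape
    if h % grid or w % grid:
        raise ValueError("image height and width must be divisible by grid")
    ch, cw = h // grid, w // grid  # cell height and width in pixels

    mask = stroke_mask.astype(np.float64)
    # Split rows and columns into (cell index, offset within cell).
    count = mask.reshape(grid, ch, grid, cw).sum(axis=(1, 3))  # (grid, grid)

    # Zero out colors outside the strokes, then sum colors per cell.
    masked_rgb = stroke_rgb * mask[..., None]
    color_sum = masked_rgb.reshape(grid, ch, grid, cw, 3).sum(axis=(1, 3))

    # Mean color over stroke pixels only; cells without strokes stay at 0.
    mean_rgb = color_sum / np.maximum(count, 1.0)[..., None]
    coverage = count / (ch * cw)
    return np.concatenate([mean_rgb, coverage[..., None]], axis=-1)

# Usage: a single red stroke on an otherwise empty 256x256 canvas.
canvas = np.zeros((256, 256, 3))
drawn = np.zeros((256, 256), dtype=bool)
drawn[10:20, 10:40] = True
canvas[10:20, 10:40] = [1.0, 0.0, 0.0]
features = embed_color_strokes(canvas, drawn, grid=8)
print(features.shape)
```

In this sketch, the 256x256 canvas in which about 0.5% of pixels carry color is reduced to an 8x8 grid in which every cell touched by the stroke holds a full color value, which is the kind of compact input a generator could consume alongside a semantic map.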

Finally, we tuned the hyperparameters to find the most lightweight feature representation approach that still achieves this control. This makes our system run fast during the generation process. Anyone reading this is welcome to review our abstract for further technical details and results!

SIGGRAPH: What do you find most exciting about the final research you are presenting to the virtual SIGGRAPH 2020 community?

ML: Of course, we believe we have fantastic results that show our successful control over the painting generation process. To be honest, though, the most exciting part of our final research is not only the control effects, but also the concept of incorporating colorization into generation to help the public paint more easily and quickly. Similarly, after I read research work from SIGGRAPH (in 3D, virtual reality, etc.), I always have the feeling that it is not all about techniques; it also inspires brand-new ideas for industry-level challenges to make human life better. So, we welcome any thoughts or art concepts!

SIGGRAPH: What’s next for “GAN-based AI Drawing Board for Image Generation and Colorization”?

ML: First of all, from a technical perspective, we plan to make our drawing board more robust by designing more flexible feature representation approaches and collecting more fine art for our network to learn from. In the long term, as I shared, we would be happy to see such an application come alive and help more of the public get involved in the arts.

SIGGRAPH: Tell us what you are most excited about for your first-ever SIGGRAPH experience!

ML: I really appreciate our work together as a team and the recognition from the SIGGRAPH conference. I look forward to learning what academia and industry are doing in the computer graphics field and to communicating with experts.

SIGGRAPH: What advice do you have for someone looking to submit a poster to a future SIGGRAPH conference?

ML: This is a big question, to be honest, since I am not a reviewer. I can only share what we did during the journey. The keywords are ideas, technical development, and demonstration of results. In particular, we ran many experiments on multiple datasets to demonstrate our results and improved them through some training strategies. Translating your work into a two-page abstract is also important.

SIGGRAPH 2020 Posters are open to Enhanced and Ultimate participants. Register for the conference today to discover this and many other in-progress research projects.

Minghao Li is a software developer at PAII Inc. in California. He actively researches GANs, computer graphics, and video understanding. He has achieved state-of-the-art results in generation from text and semantic inputs and published a paper at a content creation workshop at CVPR 2020, which won the best paper award. Li graduated from Columbia University with a master’s degree, where he focused mainly on deep learning and software development, with wide interests and experience in IoT, cloud services, and large-scale data processing.